Leaderboard

Popular Content

Showing content with the highest reputation on 04/13/2022 in all areas

-

Hi @szabesz - I am heading on vacation tomorrow for a couple of weeks, so it will be a while before I can take a look at this. That said, I am not sure if I can actually do much about this. I actually wonder if this is something that should be raised over at: https://github.com/nette/tracy because I *think* it is something they would be better off fixing in the Tracy core. 2 points

-

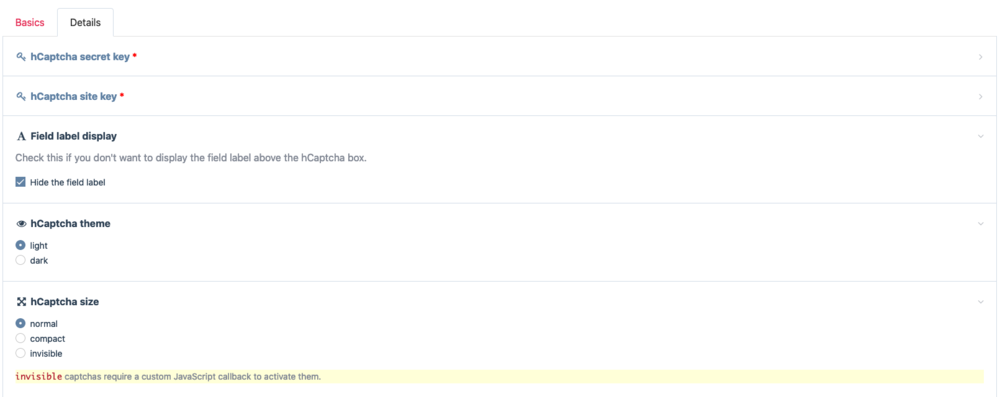

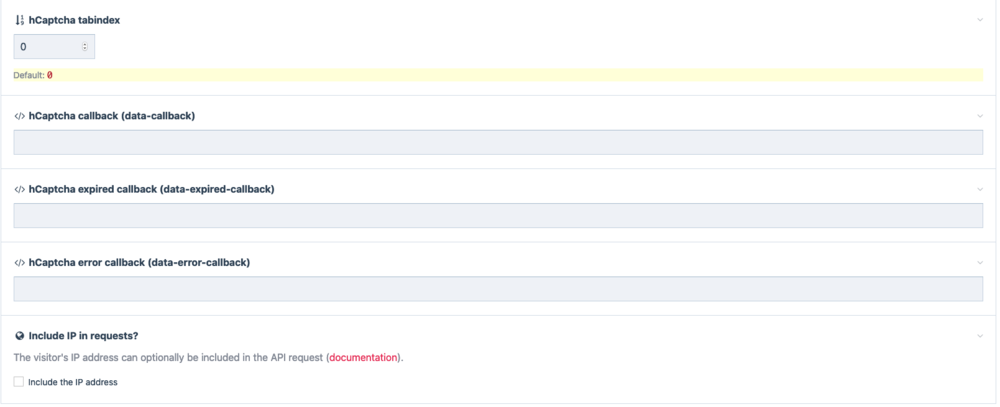

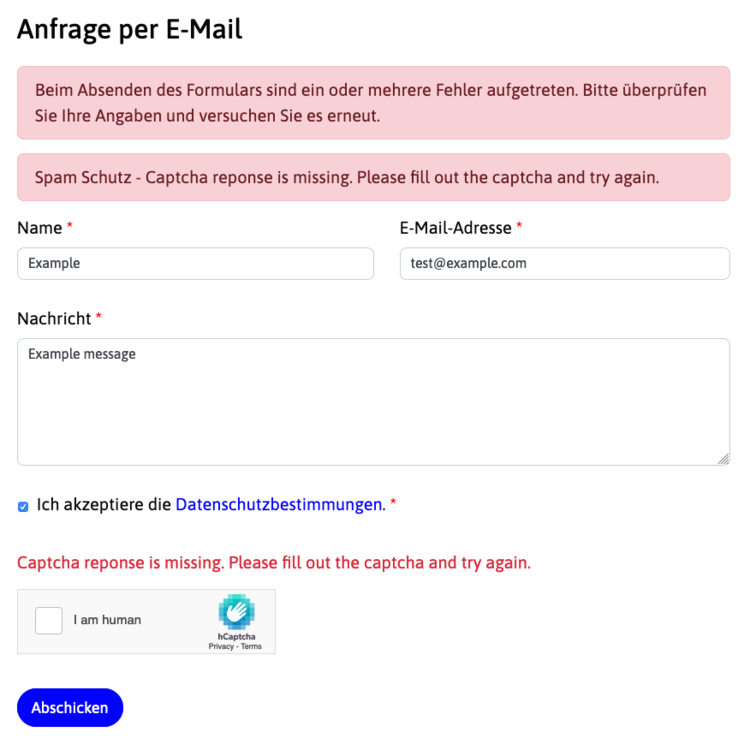

This module allows you to integrate hCaptcha bot / spam protection into ProcessWire forms. hCaptcha is a great alternative to Google ReCaptcha, especially if you are in the EU and need to comply with privacy regulations. The development of this module is sponsored by schwarzdesign.

The module is built as an Inputfield, allowing you to integrate it into any ProcessWire form you want. It's primarily intended for frontend forms and can be added to Form Builder forms for automatic spam protection. There's a step-by-step guide for adding the hCaptcha widget to Form Builder forms in the README, as well as instructions for API usage.

Features

* Inputfield that displays an hCaptcha widget in ProcessWire forms.
* The inputfield verifies the hCaptcha response upon submission, and adds a field error if it is invalid.
* All hCaptcha configuration options for the widget (theme, display size etc) can be changed through the inputfield configuration, as well as programmatically.
* hCaptcha script options can be changed through a hook.
* Error messages can be translated through ProcessWire's site translations.
* hCaptcha secret keys and site-keys can be set for each individual inputfield or globally in your config.php.
* Error codes and failures are logged to help you find configuration errors.

Please check the README for setup instructions.

Links

* GitHub repository and documentation
* InputfieldHCaptcha in the module directory

Screenshots (configuration)

Screenshots (hCaptcha widget)

1 point

-

Congrats on releasing Padloper 2! Can you show us a feature comparison list between Padloper 1 & 2? I'm a Padloper 1 (dev) owner and I would like to know if it's really worth paying 6x the price of the first version. Thanks in advance. 1 point

-

Well, in the end all it took was another minute, another try. If anyone has the same issue, here's the solution: just enter this... parent=page.id ...in the field's selector string. Ta-da! 1 point

-

Thanks for this hint! In the meantime I changed the whole site structure to a more logical tree approach, with the artworks now forced to be children of an artist. That works very well now. 1 point

-

Hello, @tires! Code 250 OK means that everything went well and your message was delivered to the recipient server. It is possible that privacy settings are blocking receipt of the messages. 1 point

-

This thing is heading to 1M views soon by the looks of it! @ryan, this one is for you: 1 point

-

In the past, when a client has asked us to show PDFs in the browser rather than having them downloaded, we've used Mozilla PDF.js and then created a link that passes the doc name in the form pdfjs/web/viewer.html?file=whatever.pdf In this day and age though I'd question whether this is really necessary. Most browsers have built-in PDF viewers anyway: https://caniuse.com/pdf-viewer and if you're overriding the user's choice as to which PDF viewer they use then it's probably not great for usability. 1 point

-

Maybe not a solution to your problems, but have you already tried https://processwire.com/modules/tracy-debugger/ ? I think it does what you are looking for and so much more. 1 point

-

Hi @ttbb, welcome to PW and the community! One solution would be to enable URL segments on the template of the /artworks page: https://processwire.com/docs/front-end/how-to-use-url-segments/ That means you'd have a URL like /artworks/[artist]/[artwork], with the artist in $input->urlSegment1 and the artwork in $input->urlSegment2. Does that help? 1 point
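To make the idea concrete, here is a small language-neutral sketch in Python of what URL segments amount to. ProcessWire does this for you in PHP and exposes the result as $input->urlSegment1, $input->urlSegment2 and so on; the function name `url_segments` and the sample artist/artwork names below are made up for illustration only.

```python
def url_segments(request_path: str, page_path: str) -> list[str]:
    """Return the path parts that follow page_path, in order.

    Illustration only: this mimics the idea behind URL segments,
    it is not ProcessWire code.
    """
    if not request_path.startswith(page_path):
        return []
    rest = request_path[len(page_path):]
    # Drop empty parts caused by leading/trailing slashes.
    return [part for part in rest.split("/") if part]


# Hypothetical request for /artworks/[artist]/[artwork]
segments = url_segments("/artworks/jane-doe/blue-period/", "/artworks/")
artist, artwork = segments[0], segments[1]  # like urlSegment1 and urlSegment2
print(artist, artwork)
```

In the real template you would read the two values from $input and look up the matching artist and artwork pages from there.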

-

Hello Adrian, I am working on my very first Unpoly 2 driven frontend and found that either Tracy's or Unpoly's JavaScript breaks in some cases. I get errors in the console like this:

    Uncaught TypeError: this.elem is null
        Panel http://mydomain.test/products/?_tracy_bar=js&v=2.9.1&XDEBUG_SESSION_STOP=1:29
        loadAjax http://mydomain.test/products/?_tracy_bar=js&v=2.9.1&XDEBUG_SESSION_STOP=1:444
        loadAjax http://mydomain.test/products/?_tracy_bar=js&v=2.9.1&XDEBUG_SESSION_STOP=1:442
        <anonymous> http://mydomain.test/products/?_tracy_bar=content-ajax.5de8b397b6_2&XDEBUG_SESSION_STOP=1&v=0.44454897717264863:1

The above example starts happening when I first use the browser's back feature. I tried to relocate unpoly.js from the head to the end of the body, but to no avail. Either Unpoly's or Tracy's JS breaks, depending on the loading order. Note that Unpoly recommends using its own event handling methods: https://unpoly.com/up.compiler as its automated event handling might interfere with "random JavaScript", as we probably see in this example.

Are you willing to deal with this issue? If so, what else should I do to help you make them work together? Thanks in advance, 1 point

-

Hi @Ivan Gretsky - I am heading on vacation for a couple of weeks as of tomorrow and then of course it will be crazy catching up when I'm back, but thanks for the GH issue. I will try to take a look as soon as I can. Sorry I never got back to it before. 1 point

-

Actually, ignore me again, it's there on production. 1 point

-

Back with more! Prepare for incoming wall of text... I mentioned adding custom directives to our .htaccess file and wanted to share some more detail on that as well as some other tips.

I was reviewing our 404s as a matter of maintenance, so to speak, to ensure that we had redirects in place as necessary. While reviewing that I found a lot (a lot) of hits that were bogus, clearly bots and even web crawlers for engines we have no interest in being listed for. What I found was that in just 48 hours we had 700 total 404s, and I imagine on some websites that number could be higher. By analyzing that log and writing custom directives I was able to take 700 404s logged by ProcessWire down to 200 which are "legitimate" in that it's traffic that needs to be redirected to a proper destination page.

I'm sharing my additional directives here as an example. Again, ANY bot/security directives should be at the very top of your .htaccess file. As always, test test test, and modify for your use case.

    # Declare this at the top of your .htaccess file and remove or comment out all other instances of this directive elsewhere
    RewriteEngine On

    # Block known bad URLs
    # Directories including sub-directories
    RedirectMatch 404 "\/(wp-includes|wp-admin|wp-content|wordpress|wp|xxxss|cms|ALFA_DATA|functionRouter|rss|feed|feeds|TKVNP|QXXLZ|data\/admin)"

    # Top level directories only - There are no assets served from these directories in root, only from /site/assets & /site/templates
    RedirectMatch 404 "^/(js|scripts|css|styles|img|images|e|video|media|shwtv|assets|files|123|tvshowbiz)\/"

    # Explicit file matching
    RedirectMatch 404 "(1index|s_e|s_ne|media-admin|xmlrpc|trafficbot|FileZilla|app-ads|beence|defau1t|legion|system_log|olux|doc)\.(php|xml|life|txt)$"

    # Additional filetypes & extensions
    RedirectMatch 404 "(\.bak|inc\.)"

    # Additional User Agent blocking not present in 7G Firewall
    <IfModule mod_rewrite.c>
        # Chinese crawlers that cause significant traffic to bad URLs
        RewriteCond %{HTTP_USER_AGENT} Mb2345Browser|LieBaoFast|zh-CN|MicroMessenger|zh_CN|Kinza|Datanyze|serpstatbot|spaziodati|OPPO\sA33|AspiegelBot|aspiegel|PetalBot [NC]
        RewriteRule .* - [F,L]
    </IfModule>

Details on this additional config:

* It blocks some WP requests that get past 7G.
* My added directives redirect to a 404, which tells the bot that it flat out doesn't exist, rather than 403 forbidden, which could indicate it may exist. I read somewhere that this is more likely to get cached as a URL not to be revisited (wish I could remember the source, it's not a major issue).
* Blocks a lot of very specific URLs/files we were seeing.
* Blocks Chinese search engine bots, because we don't operate in China. These amounted to a lot of traffic.
* Blocks common dev files like .bak and .inc.* which aren't protected by default. Obvs you want to eliminate .bak altogether in production, but added safety fallback. I have not seen this cause any issues in the Admin.
* Also consider if directives could cause problems in another language.
* Customize by reviewing your logs.

Additional measures

7G and the directives I created are a healthy amount of prevention of malicious traffic. Another resource I use is a Bad Bot gist that blocks numerous crawlers that add traffic to your site but may or may not generate 400s-500s HTTP statuses. This expands on 7G's basic list.

Bad Bot recommendations:

* Comment out: SetEnvIfNoCase User-Agent "^AdsBot-Google.*" bad_bot
  There's not really a good reason to block a specific Google bot.
* If you make Curl requests to your server then comment this line out: SetEnvIfNoCase User-Agent "^Curl.*" bad_bot
  Reason: this will block all Curl requests to your server, including those by your own code. Be sure that you don't need Curl available if leaving this active. This is included in the list to prevent some types of website scrapers. If you want to leave this active and still need to use Curl, then consider changing your User Agent.
* Comment out: SetEnvIfNoCase User-Agent "^Mediapartners-Google.*" bad_bot
  Again, not necessary to block Google's bots, might even be a bad idea for SEO or exposure (only they know, right?).

Testing

There's no such thing as too much testing. These directives are powerful and, while written well, may have edge cases (like 'null' mentioned previously). There's no replacement for manual testing; specifically, it would be a good idea to test any marketing UTMs or URLs with GET strings you may have out there, just in case.

For automated testing I use broken-link-checker, which can be called from the terminal or as a JS module. I prefer this method to using some random site scanning service. This will detect both 404s and 403s by scanning every link on your page and getting a response, which is useful for ensuring that your existing URLs have not been affected by your .htaccess directives.

broken-link-checker recommendations:

* Consider rate limiting your requests using the --requests flag to set the number of concurrent requests. If you don't, you could run into rate limits that your managed hosting company, CDN, or you (if you're like me) have built into your own server. This terminal app runs fast, so if you have a lot of links or pages those requests can stack up quickly.
* Consider using the -e flag, at least initially while testing your directives. This excludes external URLs, which will help your test complete faster and prevent any false positives if you have broken external links (which you can handle separately).
* Consider using the -g flag, which switches the request to GET, which is what browsers do.

Shortcut, just copy and paste my command:

    blc https://www.yoursite.com -roegv --requests 5

If you have access to your Apache access log via a bash/terminal instance then you may consider watching that file for new 404/403 entries for a little bit. You can do this by navigating to the directory with your access log and executing the following command (switch out the name of your log as needed):

    tail apache.access.log -f | grep "404 "

You may consider also checking for 403s by changing out the HTTP status in that command.

"This seems excessive"

I think this is good for every site, and once you get it dialed in to your needs it can be replicated to others. There's no downside to increasing the security and performance of your hosting server. Consider that any undesirable traffic you block frees up resources for good traffic, and of course reduces your attack surface.

If you need to think about scalability then this becomes even more important. The company I work for is looking to expand into 2 additional regions and I'd prefer my server was ready for it! If you get into high traffic circumstances then blocking this traffic may prevent you from needing to "throw money at the problem" by upgrading server specs if your server is running slower.

Outside of that, it's just cool knowing that you have a deeper understanding of how this works and knowing you've expanded your developer expertise further.

This isn't meant to be an exhaustive guide, but I hope I've helped some people get some extra knowledge and saved everyone a few hours on Google looking this up. If I've missed anything or presented inaccurate/incomplete information please let me know and I will update this comment to make it better.

1 point
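Since the post stresses testing for edge cases, here is a rough Python sketch for dry-running the four RedirectMatch patterns against a handful of paths before deploying them. The patterns are copied from the post; everything else (the `would_404` helper, the sample paths) is hypothetical. It assumes Python's `re` behaves like Apache's regex engine for these simple patterns and that the pattern is searched anywhere in the URL path, so treat it as a sanity check and not a replacement for testing on the real server.

```python
import re

# Patterns copied from the RedirectMatch directives in the post.
PATTERNS = [
    r"\/(wp-includes|wp-admin|wp-content|wordpress|wp|xxxss|cms|ALFA_DATA|functionRouter|rss|feed|feeds|TKVNP|QXXLZ|data\/admin)",
    r"^/(js|scripts|css|styles|img|images|e|video|media|shwtv|assets|files|123|tvshowbiz)\/",
    r"(1index|s_e|s_ne|media-admin|xmlrpc|trafficbot|FileZilla|app-ads|beence|defau1t|legion|system_log|olux|doc)\.(php|xml|life|txt)$",
    r"(\.bak|inc\.)",
]
COMPILED = [re.compile(pattern) for pattern in PATTERNS]


def would_404(path: str) -> bool:
    """True if any pattern matches somewhere in the URL path (unanchored search)."""
    return any(rx.search(path) for rx in COMPILED)


# Hypothetical sample paths: swap in real URLs from your own site and logs.
samples = [
    "/wp-admin/install.php",              # bot probe, should be blocked
    "/xmlrpc.php",                        # bot probe, should be blocked
    "/about/",                            # normal page, should pass
    "/site/assets/files/1234/photo.jpg",  # normal asset, should pass
    "/feedback/",                         # caught because it starts with "/feed"
    "/products/zinc.html",                # caught because it contains "inc."
]
for path in samples:
    print(f"{path:40} {'404' if would_404(path) else 'ok'}")
```

The last two samples show the kind of edge case worth looking for: the patterns are not anchored on the right, so a legitimate page whose name merely starts with or contains one of the blocked strings gets a 404 too. If your site has such URLs, tighten the pattern for your use case.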

-

I was thinking about this argument and this is something I also wish could be improved. There doesn't seem to be a roadmap anymore. The current roadmap is from 2019: https://processwire.com/about/roadmap/ I think it would be nice to revive the roadmap or at least make a poll for what the community is wishing for the most. The last poll was at the beginning of 2021. The current approach, that a feature will be added if @ryan has the need for it on a project, is not bad, but it leads to many developer-centric and fewer client-centric features, which I personally often don't use, because there are already so many developer-centric features. 1 point

-

I haven't checked pages with output that the others use, but one page that uses the Srcset Image Textformatter should take something like <img src="image.jpg"> in a CKEditor field and transform it to: <img src="image.jpg" srcset="image_320.jpg 320w, image_480.jpg 480w"> based on settings you enter into the module for the size variants. Instead, the output is just the normal non-srcset version of the image. 1 point
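For anyone unsure what the expected transformation looks like, here is a tiny Python sketch that builds the srcset version from a source filename and a list of widths. This is not the Textformatter's code (that module is PHP); the function name `img_with_srcset` and the `name_WIDTH.ext` naming are assumptions taken only from the example in the post, and the sketch assumes the filename has an extension.

```python
def img_with_srcset(src: str, widths: list[int]) -> str:
    """Build an <img> tag with a srcset listing one variant per width.

    Assumes variants are named "<stem>_<width>.<ext>", as in the post's example.
    """
    stem, dot, ext = src.rpartition(".")
    candidates = ", ".join(f"{stem}_{w}{dot}{ext} {w}w" for w in widths)
    return f'<img src="{src}" srcset="{candidates}">'


print(img_with_srcset("image.jpg", [320, 480]))
# <img src="image.jpg" srcset="image_320.jpg 320w, image_480.jpg 480w">
```

Comparing the rendered page source against this shape is a quick way to tell whether the Textformatter ran at all.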

-

Interesting discussion, and I'll add a rather ironic perspective. I inherited a largely non-functional website project that someone else had built in Craft, about 3 years ago, and at the time I found the Craft documentation wasn't particularly clear, whereas I worked out how to use ProcessWire after about 20 minutes of reading the documentation. I converted the whole project to ProcessWire, and actually got the project functional. I had to write several custom modules and deal with a lot of hooks and set up CRON jobs to interact with a third party API, and I found ProcessWire a pleasure to work with. That said, I can understand that there are pain points for some people with ProcessWire, and it's always good to look at other systems to see how they do things in case there's something useful that could be incorporated into ProcessWire. My personal preference other than ProcessWire is Umbraco, which is built on ASP.Net rather than PHP, but in many respects is similar, so I don't have a problem jumping between PHP for ProcessWire and Razor syntax and C# for Umbraco. It also is an open source project, but with commercial add-ons like a form builder (which makes ProcessWire FormBuilder look cheap). Now that .Net and SQL Server run on Linux, it's potentially even more appealing to me, although ProcessWire is still my first choice. Currently I'm a solo developer, but as my workload grows, and also clients are starting to ask for an insurance policy in case something happens to me, the potential to be able to manage collaboration on projects is something that's increasingly on my mind, and out of the box, this isn't something that ProcessWire handles, although there are third party solutions like RockMigrations by @bernhard. 1 point

-

Yes. This has annoyed me several times. Not sure why I did not think of disabling session fingerprinting in my local dev config... $config->sessionFingerprint = false; 1 point

-

Some of you might have used my previous U2F module for your two-factor needs. Well, I was recently informed that Chrome is dropping plain U2F support in favour of WebAuthn. So after a full day of debugging some cryptic errors I am proud to announce a WebAuthn module.

This has some major improvements. For example, you can now use on-device credentials like Windows Hello/Apple Touch ID. This means that even people without a Yubikey can benefit from modern two-factor authentication. It also has much better cross-platform support. For example, NFC will now work on an iPhone. I do not recall the original U2F stuff working well on iPhones, so yay!

There is still the original issue that ProcessWire imposes with its Tfa class: it is a set-up-once-and-never-edit-again system, so you can only add your on-device credentials for a single device, because once saved you can't then edit your credentials on a second device. You also lack the options to revoke a single credential or add a new one. You have to wipe out the config and re-add your keys again. It sucks, but realistically if you need more than 3x credentials you're almost defeating the point of Tfa.

I feel the need to also point out that this does not replace passwords. A fully passwordless setup is something WebAuthn can do, but I think implementing that inside ProcessWire would be a huge challenge. It is frankly a form of magic that I was able to make WebAuthn work within the confines of ProcessWire's Tfa class.

Github: https://github.com/adamxp12/TfaWebAuthn
ProcessWire Modules: https://processwire.com/modules/tfa-web-authn/

I hope this module helps you guys out securing your ProcessWire websites. If you have any issues just reply and I will do my best to help you out. 1 point

-

It looks great! Wanted to test but got: "This module does not indicate compatibility with this version of ProcessWire." Is it safe to use it anyway? I think @adrian's suggestion to add PW 3.x compatibility is correct. 1 point

-

This is the line https://github.com/netcarver/PW-FieldtypeTime/blob/4332e922d6c8e98b64e8e6e408ad7c71e318ffff/InputfieldTime.module#L83 So for the time being, in InputfieldTime.module change line 83 to something like $attrs['placeholder'] = "00:00"; 1 point