Everything posted by ryan

-

This week I've bumped the dev branch version to 3.0.241. Relative to the previous version, this one has 29 commits with a mixture of issue resolutions, new features and improvements, and other minor updates. A couple of PRs were also added today.

This week I've also continued work on the FieldtypeCustom module that I mentioned last week. There were some questions about its storage model and whether you could query its properties from $pages->find() selectors (the answer is yes). Since the properties in a custom field are not fixed, and can change according to your own code and runtime logic, it doesn't map to a traditional DB table-and-column structure. That's not ideal when it comes to query-ability. But thankfully MySQL (5.7.8 and newer) supports a JSON column type and has the ability to match properties in JSON columns in a manner similar to how it can match them in traditional DB columns. The actual MySQL syntax to do it is a little cryptic, but thankfully we have ProcessWire selectors to make it simple. (It works the same as querying any other kind of field with subfields.)

MySQL can also support this with JSON encoded in a more traditional TEXT column with some reduced efficiency, though with the added benefit of supporting a FULLTEXT index (whereas the JSON column type does not support that type of index). For this reason, FieldtypeCustom supports both JSON and TEXT/MEDIUMTEXT/LONGTEXT column types. So you can choose whether you want to maximize the efficiency of column-level queries, or add the ability to perform text matching on all columns at once with a fulltext index. While I'm certain it's not as efficient as having separate columns in a table, I have been successfully using the same solution in the last few versions of FormBuilder (entries), and have found it works quite well.

More soon. Thanks for reading and have a great weekend!
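To give a rough idea of the "cryptic" MySQL side that a subfield selector hides, here is a minimal sketch in plain PHP. The function name `jsonPropertyWhere()` and the column name `data` are assumptions made up for illustration; this is not FieldtypeCustom's actual code or the SQL it really generates. It only shows the general shape of matching one property in a JSON column with MySQL's `JSON_EXTRACT()` and `JSON_UNQUOTE()` functions.

```php
<?php
/**
 * Hypothetical helper (not part of ProcessWire or FieldtypeCustom): builds a
 * MySQL WHERE fragment that matches one property stored in a JSON column.
 */
function jsonPropertyWhere(string $column, string $property, string $operator = '='): string {
    $allowed = ['=', '!=', '<', '>', '<=', '>='];
    if(!in_array($operator, $allowed, true)) {
        throw new InvalidArgumentException("Unsupported operator: $operator");
    }
    // Allow only simple identifiers so nothing unexpected ends up in the SQL string
    foreach([$column, $property] as $name) {
        if(!preg_match('/^[a-zA-Z_][a-zA-Z0-9_]*$/', $name)) {
            throw new InvalidArgumentException("Invalid name: $name");
        }
    }
    // JSON_EXTRACT() reads the value at the JSON path, JSON_UNQUOTE() strips the JSON quotes;
    // the "?" is a placeholder for a value bound later in a prepared statement
    return "JSON_UNQUOTE(JSON_EXTRACT(`$column`, '$.$property')) $operator ?";
}

// Builds the fragment for an "email" property stored in an assumed "data" column
echo jsonPropertyWhere('data', 'email'), "\n";
```

With selectors, the same kind of match would presumably be written the way any other subfield is queried, i.e. the field name, a dot, and the property name.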

- 2 replies

-

Since it looks like there is some crossover with Mystique, and it also looks like that module is active and supported, I'll release this module in ProFields instead. That way it's not competing with Mystique, which looks to already be a great module. I'll focus on making Custom fields have some features that maybe aren't available in Mystique, like the ability to query from $pages->find() and perhaps supporting some more types, etc. Plus, the new module fits right in with the purpose of ProFields and is a good alternative to Combo, where each has slightly different benefits for solving similar needs.

-

@Jonathan Lahijani Sort of, but it's not just that. I'll go into the details once I've got the storage part fully built out. It supports any Inputfield type that can be used independently of a Fieldtype. Meaning, the same types that could be used in a module configuration or a FormBuilder form, etc. It can be used IN repeater fields (tested and working), and likely in file/image custom fields as well (though not yet tested). But you wouldn't be able to use repeaters or files/images as subfields within the custom field. Files/images will likely be possible in a later version though, as what's possible in Combo will also be possible here. Yes, if you use the .php definition option (rather than the JSON definition option) then there are $page and $field variables in scope to your .php definition file. @MrSnoozles Searching yes. Can be used in repeaters (yes), but can't have repeaters used as subfield/column types within it. @poljpocket Yes. While I've not fully developed the selectors part of it yet, the plan is that it will support selectors with subfields for searching from $pages->find(), etc. @wbmnfktr The API side of this should work like most other Fieldtypes with subfields, so I think it should be relatively simple for importing and may not need any special considerations there, but I've not tried using it with a module like ImportPagesCSV yet.

-

@BrendonKoz Looks like Mystique defines fields (subfields?) in a very similar way, in that it is also using the Inputfields settings/API (as an array) to define them. Though looks like the use of the "type" property might be something different, since it is mapping to a constant in the module rather than the name of an Inputfield. Maybe those constants still map to Inputfield names. Mystique looks good, perhaps I could have just used Mystique to solve the client's request! Though admittedly I have a lot of fun building Fieldtypes and Inputfields, and I'm guessing there will be just as many differences as similarities, but glad to see there are more options. @Kiwi Chris The syntax I'm using is just the Inputfields API. Looks like the Mystique field above also uses the same API as well. Nice that there is some consistency between these different use cases. That's good to know. Though in this case it was also that I needed a hierarchy of fieldsets which Combo doesn't support. Plus wanted something a little more lightweight, because there were so many fields and the client just needs the ability to store them and not so much to query them. I don't know if Combo is compatible with the migrations module or not, but always good to know about the options. I usually do prefer to manage stuff in the admin, but this is a case where it just seemed like it was not going to be fun.

-

@wbmnfktr Each "custom" field is just one "real" field in the admin, with any number of subfields within it. The subfields are not themselves ProcessWire fields. Instead, the subfields are similar to subfields/columns in Combo fields. If using the JSON configuration, you can technically edit it in the admin (Setup > Fields) if you want to, rather than maintaining a file. But I figure most probably won't be editing JSON from their browser. You can however configure other settings related to the custom field in Setup > Fields > your_custom_field, such as usual ones (label, description, icon, etc.), plus others like entity encoding when output formatting is on, whether the subfields should have an outer wrapping fieldset (same as the hideWrap setting in Combo), and more. @Kiwi Chris I am not that familiar with Rock Migrations, but I'm thinking we might be talking about different things as this is a Fieldtype and Inputfield, not a module related to migrations. It doesn't create any new fields or anything like that, but it lets you define the structure of subfields within a "custom" field.

-

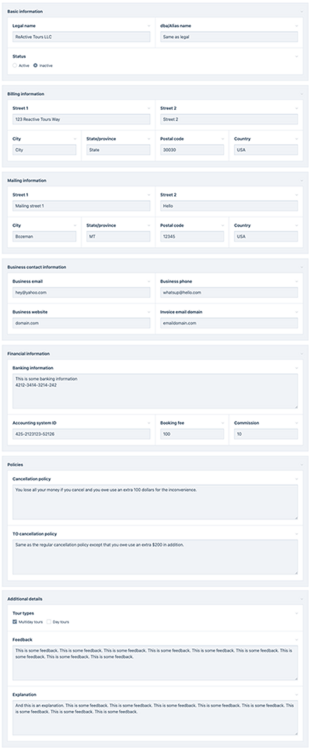

This week I received some client specifications for a project that indicated it was going to need a lot of fields, and fields within fields. There are a lot of tools for this kind of stuff in ProFields, but none that I thought would make this particular project "easy" per se. The closest fit was the Combo field, but with 40-50 or so subfields/columns within each of the "groups", it was still going to be kind of a pain to put together. Not to mention, just a lot of fields and subfields to manage. To add more challenge to it, there was another group of 30 or so fields that needed to be repeatable any number of times (in a repeater field). I wasn't looking forward to building out all these fields.

That scale of fields/subfields is at the point where I don't really want to build and manage it interactively. Instead, I want to just edit it in a code editor or IDE, if such a thing is possible. With any large set of fields, there are often a lot of redundant fields where maybe only the name changes, so I wanted to be able to just copy and paste my way through these dozens of fields. That would save me a lot of time, relative to creating and configuring them interactively in the admin.

Rather than spending a bunch of time trying to answer the specifications with existing field types, I ended up building a new Fieldtype and Inputfield to handle the task. Right now it's called the "Custom" field (for lack of a better term). But the term kind of fits because it's a field that has no structure on its own and instead is defined completely by a custom JSON file that you place on your file system. (It will also accept a PHP file that returns a PHP array or InputfieldWrapper.)

Below is an example of defining a custom field named "contact" with JSON. The array keys are the field names. The "type" can be the name of any Inputfield module (minus the "Inputfield" prefix). And the rest of the properties are whatever settings are supported by the chosen Inputfield module, or Inputfield properties in general.

/site/templates/custom-fields/contact.json

    {
      "first_name": {
        "type": "text",
        "label": "First name",
        "required": true,
        "columnWidth": 50
      },
      "last_name": {
        "type": "text",
        "label": "Last name",
        "required": true,
        "columnWidth": 50
      },
      "email": {
        "type": "email",
        "label": "Email",
        "placeholder": "person@company.com",
        "required": true
      },
      "colors": {
        "type": "checkboxes",
        "label": "What are your favorite colors?",
        "options": {
          "r": "Red",
          "g": "Green",
          "b": "Blue"
        }
      },
      "address": {
        "type": "fieldset",
        "label": "Address",
        "children": {
          "address_street": {
            "type": "text",
            "label": "Street"
          },
          "address_city": {
            "type": "text",
            "label": "City",
            "columnWidth": 50
          },
          "address_state": {
            "type": "text",
            "label": "State/province",
            "columnWidth": 25
          },
          "address_zip": {
            "type": "text",
            "label": "Zip/post code",
            "columnWidth": 25
          }
        }
      }
    }

The result in the page editor looks like this:

The actual value from the API is a WireData object populated with the names mentioned in the JSON definition above. If my Custom field is named "contact", I can output the email address like this:

    echo $page->contact->email;

Or if I want to output everything in a loop:

    foreach($page->contact as $key => $value) {
      echo "<li>$key: $value</li>";
    }

After defining a couple of large fields with JSON, I decided that using PHP would sometimes be preferable because I could inject some logic into it, such as loading a list of 200+ selectable countries from another file, or putting reusable groups of fieldsets in a variable to reduce duplication. The other benefits of a PHP array were that I could make the field labels __('translatable'); and PHP isn't quite as strict as JSON about extra commas. So the module will accept either JSON or a PHP array. Here's the equivalent PHP to the above JSON:

/site/templates/custom-fields/contact.php

    return [
      'first_name' => [
        'type' => 'text',
        'label' => 'First name',
        'required' => true,
        'columnWidth' => 50
      ],
      'last_name' => [
        'type' => 'text',
        'label' => 'Last name',
        'required' => true,
        'columnWidth' => 50
      ],
      'email' => [
        'type' => 'email',
        'label' => 'Email address',
        'placeholder' => 'person@company.com'
      ],
      'colors' => [
        'type' => 'checkboxes',
        'label' => 'Colors',
        'description' => 'Select your favorite colors',
        'options' => [
          'r' => 'Red',
          'g' => 'Green',
          'b' => 'Blue'
        ],
      ],
      'address' => [
        'type' => 'fieldset',
        'label' => 'Mailing address',
        'children' => [
          'address_street' => [
            'type' => 'text',
            'label' => 'Street'
          ],
          'address_city' => [
            'type' => 'text',
            'label' => 'City',
            'columnWidth' => 50
          ],
          'address_state' => [
            'type' => 'text',
            'label' => 'State/province',
            'columnWidth' => 25
          ],
          'address_zip' => [
            'type' => 'text',
            'label' => 'Zip/post code',
            'columnWidth' => 25
          ]
        ]
      ]
    ];

The downside of configuring fields this way is that you kind of have to know the names of each Inputfield's configuration properties to take full advantage of all its features. Interactively, they are all shown to you, which makes things easier, and we don't have that here. There is yet another alternative though. If you define the fields like you would in a module configuration, your IDE (like PhpStorm) will be able to provide type hinting specific to each Inputfield type. I think I still prefer to use JSON or a PHP array, and consult the docs on the settings; but just to demonstrate, you can also have your custom field file return a PHP InputfieldWrapper like this:

    $form = new InputfieldWrapper();

    $f = $form->InputfieldText;
    $f->name = 'first_name';
    $f->label = 'First name';
    $f->required = true;
    $f->columnWidth = 50;
    $form->add($f);

    $f = $form->InputfieldText;
    $f->name = 'last_name';
    $f->label = 'Last name';
    $f->required = true;
    $f->columnWidth = 50;
    $form->add($f);

    $f = $form->InputfieldEmail;
    $f->name = 'email';
    $f->label = 'Email address';
    $f->required = true;
    $f->placeholder = 'person@company.com';
    $form->add($f);

    // ... and so on

Now that this new Fieldtype/Inputfield is developed, I was able to answer the client's specs for lots of fields in a matter of minutes. Here's just some of it (thumbnails):

There's more I'd like to do with this Fieldtype/Inputfield combination before releasing it, but since I've found it quite handy (and actually fun) to build fields this way, I wanted to let you know I was working on it and hope to have it ready to share soon, hopefully this month. Thanks for reading and have a great weekend!
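As a rough illustration of the "reusable groups of fieldsets" point in the post above, a PHP definition could build the address group once and reuse it under different names. This is only a sketch: the `addressFieldset()` helper and the "billing"/"shipping" names are made up for the example and are not part of the module. The array keys (`type`, `label`, `children`, `columnWidth`) are the same ones used in the definitions in the post.

```php
<?php
// Hypothetical helper: returns an address fieldset definition whose subfield
// names share the given prefix, so the same group can be reused several times.
function addressFieldset(string $prefix, string $label): array {
    return [
        'type' => 'fieldset',
        'label' => $label,
        'children' => [
            "{$prefix}_street" => [ 'type' => 'text', 'label' => 'Street' ],
            "{$prefix}_city" => [ 'type' => 'text', 'label' => 'City', 'columnWidth' => 50 ],
            "{$prefix}_state" => [ 'type' => 'text', 'label' => 'State/province', 'columnWidth' => 25 ],
            "{$prefix}_zip" => [ 'type' => 'text', 'label' => 'Zip/post code', 'columnWidth' => 25 ],
        ],
    ];
}

$definition = [
    'first_name' => [ 'type' => 'text', 'label' => 'First name', 'required' => true, 'columnWidth' => 50 ],
    'last_name' => [ 'type' => 'text', 'label' => 'Last name', 'required' => true, 'columnWidth' => 50 ],
    // The same group, defined once and used twice
    'billing' => addressFieldset('billing', 'Billing address'),
    'shipping' => addressFieldset('shipping', 'Shipping address'),
];

// In an actual definition file the last line would instead be: return $definition;
print_r(array_keys($definition['shipping']['children']));
```

The prefix keeps the subfield names unique across both groups, which matters because the subfield names end up as the property names on the field value.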

- 17 replies

-

@bernhard That sounds good to me. Probably out of scope relative to our resources in the short term, but longer term this sounds like the ideal. This is one of many reasons why I like to get a new main/master version out, but it's probably not often enough. Maybe one way to keep the main branch fresh for folks looking at the date you pointed to is if we maintained a changelog type of file that covered all the versions, including the dev branch versions. This changelog file would exist on both branches and get merged to the main/master branch every time the version number was increased, regardless of branch. Also a good point about the README links. That makes sense that those links should move closer to the top. Maybe at the top it should also mention that there are new dev branch versions at least every month. Good ideas. Growing the user base is good for everyone. Good for us and the community, and good for those new users who get something great they didn't know about before. I think I mentioned "overhaul of this website" in the message that started this thread. We are ready for a redesign after 7 years no doubt. Ideally I'd like to have a pro design it, someone like @diogo or one of the other great professional designers in the community, like those you mentioned. I've thought a redesign could be both of the website and the admin, and that perhaps they even use the same design/theme. I have some rough ideas about the design concept but I'm not the right one to design it. It's definitely not a default Uikit theme by any stretch, but if that's the impression you get, then understood. One thing that looks dated is the computer on the homepage, it's still my 2017 iMac that I use everyday, so my computer is a bit outdated too! But until we have a new design in place, I've thought I should just remove that computer and leave the text. 
Something like this might be good at least for instances where someone wants priority attention to one thing or another that might not otherwise get priority. I focus largely on issues that are easily reproducible and affect many people. If an issue only appears naturally in very rare instances and is a simple matter to work around, or if it's difficult to reproduce (requiring a lot of set up or combinations of factors), then I try to delay those until time is more abundant. I can easily lose days of work trying to identify and fix obscure issues that won't help many people. The reality is I love going down these rabbit holes, fixing things is actually quite fun, but I have to be careful and pick-and-choose or I'll lose valuable time with little to show for it. I love it! I do wonder if the modules showcase is relatable enough to people that don't already know PW, and thus may not know what modules are. Might also be confusing in the admin side, where there's also a Modules tab, and folks might get mixed up about what is what. But I like the idea of having a different demo site, regardless of what data it is showcasing. What about if we linked to off-site demos? Your demo for RockFrontend is fantastic and I like how it actually lets them edit everything and resets it every hour (is this really secure?). But I imagine you and others in the community could also build a great ProcessWire demo that we could link to. The win-win of it would be that we'd be sending you traffic and you could promote your tools in the demo as well. The same demo page on the ProcessWire site could link to the demo for RockFrontend, PAGEGRID, and others. Basically, a compilation of PW demos, rather than just one. I could also update the Skyscrapers profile and make that one of them, but even an updated one should probably be near the end of the list of demos at this point. Always a good idea. We kind of do that already in the About section, but it needs a content refresh. 
Also, having the year in there is a good idea since I think that's what people are searching for, and thus attracts search traffic. Good points. The "sell" part of it isn't really in my bloodstream, so as you say, it's something where we'd need experts. I was looking through your website the other day and actually think you do a great job of communicating and thereby selling your products and services. Your skills here also come across in your recommendations, thank you for all of the good ideas.

- 127 replies

-

@nurkka It works for me at least:

    $wire->addHookAfter('Pages::saved(toggle_checked=1, status<trash)', function($e) {
      $page = $e->arguments(0);
      $e->warning("Saved page $page->path");
    }, [ 'priority' => 200 ]);

Check if your checkbox field is configured to have a non-default value?

-

@szabesz I've thought Lexical looks like a really interesting project so have been curious about it. I can't even make it past the first line of their quick-start though, as it gives me a JS error. I'm interested to see what the guy from BookStack comes up with. I'd also like to experiment with Lexical more. TinyMCE is providing custom open source licenses to projects like ours, and I have requested it from them here, but so far the responses I've received from them don't seem to be aware of any of it. They've been giving me prices for commercial licenses, so I'm kind of confused. There is a fork of TinyMCE 6 called HugeMCE that will apparently continue on the MIT license, but it's not clear how far that will go.

-

@jploch @bernhard Long term I think we'll want to make it possible for others to sell their commercial modules here. But short term, what else can we do to help? You've both written some amazing page building software that deserves a lot of attention. Would you like to do a blog post about it here? Or should we maybe have a section on the site that highlights these tools? Perhaps something on the download or modules page? Doing commercial modules is hard either way, for me too, but I think it benefits all of us to have these tools available. Let me know what I can do to help.

-

@theoretic It's actually the opposite of that. ProcessWire is an API and that's all it is. Any output comes from the user of the API and not from ProcessWire, as it doesn't output anything on its own. ProcessWire started as a headless CMS, before the term even existed. The admin was later added on as an application built in PW, and then grew from there. But the base of PW is still just nothing but the API. ProcessWire itself doesn't output HTML. But since most use it for that, all of our example site profiles output HTML. There could just as easily be a site profile that outputs JSON or XML, or whatever you want it to. The point is PW has always been separate from whatever you choose to output with it, so that it has no opinion about what you use it for. While we could have a site profile that outputs something other than HTML, I don't really know what we would have it output or who it would be for, but maybe someone else does (?), it would be a simple matter.

- 127 replies

-

@tb76 This stuff is kind of a pain to edit in IP.Board so I've tried to normalize it all to be easier to maintain and reuse. This means maintaining bodycopy and prices separately where possible. But it should state the optional yearly renewal fee once the item is in your cart.

-

Output logic is a part of the view. When I build a site profile to share with others my primary goal is to make it as simple and easy-to-follow as possible. For most websites powered by ProcessWire the template files are the views, and that's where I think most should start too, as it's very simple. As needs grow, many will naturally isolate the views to reusable files separate from the template files when it makes sense (like that parts directory in the invoice profile). But I think it's good for PW to be less opinionated about that because people may be using different template engines, different output strategies (direct, delayed, markup regions), etc., or they may not even care about following an MVC pattern, even if PW naturally leads there. This pattern was around before we had web sites/applications, so the "view" part is not like it was when we were building desktop applications in the old days with Borland C++. With HTML we've got server side markup and the additional layers of client side CSS and JS. Not everyone always agrees about where to draw the lines and it can depend on the case. I don't think we should decide that for people and I think it's good to be flexible on this part of it.

- 127 replies

-

I don't think you need a hook. Just use /site/templates/admin.php, and narrow in from there. For instance, if you want to limit to logged-in users:

    if($user->isLoggedin()) { ... }

or if you want to exclude ajax requests:

    if(!$config->ajax) { ... }

I'm not sure I fully understand the second scenario you mentioned. But I think you'd have to decide more specifically what this means: "first time user opens the admin while session is still valid from last time". For instance, maybe you want to correspond with a period of time, such as 10 minutes?

    $delay = 600; // i.e. 10 minutes (600 seconds)
    $thisTime = time();
    $lastTime = $session->get('lastTime');
    $session->set('lastTime', $thisTime);

    if(!$lastTime) {
      // this is the first request of the session
    } else if($thisTime - $lastTime > $delay) {
      // last request was MORE than 10 minutes ago
    } else {
      // last request was LESS than 10 minutes ago
    }

-

@Jonathan Lahijani Preferably use require_once() or include_once(). But if you prefer autoloading, you could add this in your /site/init.php if you wanted to autoload any classes in /site/classes/ that ended with "PageHooks": $classLoader->addSuffix('PageHooks', $config->paths->classes); ProcessWire's class autoloader is more efficient than most, but if you already know a file exists and where it is, I prefer to require_once(). It's more efficient and more clear, nothing hunting for files behind the scenes. There's a reason why this file exists in ProcessWire, even though all of those classes could likely load without it. It makes the boot faster. Class autoloading is obviously necessary for many cases, especially if working with other libraries. But for a case like the one mentioned here, skip the autoloading unless you feel you need it.

-

@Jonathan Lahijani I'd need specific examples to respond to or questions to answer. This is a fully built out profile. I think some might expect that I would classify/objectify everything, but I don't do that unless there's a strong reason for doing so. I use classes when there's an OO reason for doing so, and rarely is "group of related functions" one of those reasons, unless for sharing and extending. Using custom page classes or OOP is not at all necessary for most installations. It's only when you get into building larger applications that you might benefit from these things. And even then, it's not technically necessary.

One good reason to use custom Page classes is just to have a documented or enforceable type, with no need to have any code in the actual class:

    /**
     * @property string $title
     * @property float $price
     * @property PageArray|CategoryPage[] $categories
     * @property string $body
     * @property Pageimages $photos
     */
    class ProductPage extends Page {}

If you add something to the class, it might be to add an API for the type's content that reduces the redundant code you'd need when outputting it.

    class ProductPage extends Page {
      /**
       * Get featured photo for product
       *
       * @return Pageimage|null
       *
       */
      public function featuredPhoto() {
        $photos = $this->photos;
        if($photos->count()) return $photos->first();
        $photo = null; // stays null when no category has a photo
        foreach($this->categories as $category) {
          $photo = $category->photos->first();
          if($photo) break;
        }
        return $photo ? $photo : null;
      }
    }

I'd like that too. Maybe "buy" is a longer term goal, but shorter term I'd be interested in suggestions for how best to help promote others' modules.

@bernhard There's no need to use OOP in ProcessWire, especially for someone new to it. ProcessWire does all the work to make sure that by the time your template file is called, you are output ready. So it's only once you get to be more advanced that you might then start using hooks, and you might never use OOP. If someone wants to add a hook, the most reliable place for that is the /site/ready.php file, or in some file that you include from it (conditionally or otherwise), and that's what I'd recommend.

ProcessWire wouldn't even load your ProductPage.php file if no instance was created. But even if it did, why would there be a need for any hooks related to page class "ProductPage" if no page of the type is ever loaded? Maybe I'm missing something. You already know I'm skeptical of hooks being added by Page objects, but now we're talking about hooks that aren't even related to the page class being within it? If there are, they still wouldn't be called unless something triggered PW to load the ProductPage.php file. And the primary reason it would load the file is to create an instance of the class.

That makes sense. For most I'd suggest this:

    $wire->addHook('Pages::saveReady(template=product)', function($e) {
      // ...
    });

Or this if you are using custom page classes, and want to capture ProductPage and any other types that inherit from it:

    $wire->addHook('Pages::saveReady(<ProductPage>)', function($e) {
      // ...
    });

If it's conditional on the requested $page, then wrap the call in an if():

    if($page->template->name === 'product') {
      $wire->addHook(...);
    }

If you need to hook the same method for multiple types, it can be more efficient to capture them all with one hook. Maybe you want it to call a saveReady() method on any custom Page classes that have added it. Since you wanted hook type code in the Page class, here's how you could do it without having hooks going into the Page class:

    $pages->addHook('saveReady', function($e) {
      $page = $e->arguments(0);
      if(method_exists($page, 'saveReady')) $page->saveReady();
    });

Rather than more initialization methods, if you needed hook-related implementation in the custom Page class, the above is the sort of thing that I think would be better. At this point, it's only about being "ready to save THIS instance of the page" which I'd have no concerns about. I could even see the core supporting this so that you could just add a saveReady() or saved() method to the custom Page class, and neither you nor the core would even need to use hooks.

But back to hooks, if you don't want to load up your /site/ready.php with code, maybe just use ready.php as the gatekeeper and organize them how you want. If you only need hooks for the admin, maybe put them in /site/templates/admin.php. If you want to maintain groups of hooks dedicated to one type or another, put them in another file that you include from your ready.php file:

    include('./hooks/product.php');

I understand you like to maintain these things in or alongside your Page class files. Your MyCustomPage.php class file is one that might be suitable because those hooks won't get added unless an instance of the class is created, or something does a class_exists('ProcessWire\ProductPage'). I think you did something like the following in an earlier example, and it's just fine because it's independent of any instance and doesn't add pointers into any specific instance, and will only ever get added once:

    class ProductPage extends Page {}

    $wire->addHook(...);

Or maybe you want the hooks in a separate related but dedicated file. It would have the same benefits as the previous example, but isolated to a separate file:

    class ProductPage extends Page {
      public function wired() {
        require_once(__DIR__ . '/ProductPageHooks.php');
        parent::wired();
      }
    }

Maybe you want to do it for all classes that extend BasePage.php. Or you could use a trait, but here's an example using inheritance:

    // /site/classes/BasePage.php
    class BasePage extends Page {
      public function wired() {
        parent::wired();
        $f = __DIR__ . '/' . $this->className() . 'Hooks.php';
        if(file_exists($f)) require_once($f);
      }
    }

    // /site/classes/ProductPage.php
    class ProductPage extends BasePage {}

The word "trivial" means "of little value or importance" and I wouldn't agree with that classification of these files. But I think you might (?) mean that it's really "basic/simple" (which is the meaning I think of sometimes too), and that's actually the goal of it, so I would agree with that classification.

-

@cwsoft These methods don't make sense in the core, unless they are to initialize each individual instance of a Page class. But Page classes (like all objects in PW) already have methods for that: __construct() and wired(). As I understand the methods requested here, they are independent of Page instance, so honestly don't belong in a core Page class, and are not something that I think the core should suggest. See what I wrote above for why. Though they are trivial to implement on your own with a static variable should you want it. I'd still advise against it, especially for hooks, but here's an example:

    class MyPage extends Page {
      static private $ready = false;
      public function wired() {
        parent::wired();
        if(!self::$ready) {
          // your init code...
          self::$ready = true;
        }
      }
    }

-

@bernhard Good questions and I'll answer according to the design of the core. These considerations are rigid when it comes to what the core does with these Page classes, but flexible outside of that. You are using Page classes in a way that gives them multiple roles. Whether that's good or not depends on your architectural point of view. But it sounds like you are happy with how it's working, and you've got a well defined strategy, and this is valuable. So none of this may useful for your case, so keep that in mind. That sounds okay to me. Why does it load an extra 10-20 pages? Or does that just depend on what pages are used in the request? As long as that magic page interface isn't increasing resource usage each individual Page object significantly, then it should scale just fine. It shouldn't be related to either. It should be independent of environment. A page is a type that aggregates content for a location in the tree. The problem with having front-end-only or admin-only stuff in a Page object is that it's code in the page that steps outside of its role and applies for one environment and not another. It can be seen as a type of bloat, turning a Page object into a jack of multiple trades. If you've got significant code that's just for one environment or another then the idea is that it's better to keep it separate from the Page. Perhaps delegated to a different class like my earlier example (or even a function outside of a class). But if it's just for a one-off case or something then I wouldn't bother. Take that getPageListLabel() method, that's something to represent the Page in the admin only, which is why it makes me uneasy. It's a tradeoff. It's a one-off case, so I think it's okay as long as we don't add more like it. Potentially the method might have front-end value in some cases too, so maybe that's another reason it's an okay compromise. 
But if we found ourselves starting to put a lot of admin-specific methods in Page classes then those would be better in a separate supporting class or function, or better yet, decouple it from the class entirely. That way it doesn't add code to the page class for something that goes outside its responsibility. Another point is that often code added for one environment or another will be the same between different page classes. To avoid repeating yourself, you'd have to repeat the same methods in multiple classes, or more likely add complexity with more levels of inheritance or traits to share that code. Moving that code to a dedicated class makes it more contextual, maintainable and extendable across multiple classes. There's that word "things" which seems like it might mean "everything" in this context. Here we're treating the Page like it's a Swiss Army knife for the type, rather than the type itself. It's like being the car and the carwash, the lawnmower and the grass, or not just the ball but the bat and glove too. That editForm() method looks like it's got the Page class now involved in manipulating forms in the admin. In that case Page is not just a type representing an aggregate of content, but also a form editor, adding application logic, giving the Page class multiple unrelated responsibilities. Some responsibilities which apply to all instances of the page and others which might only apply to one instance on occasion. If the Page must be the gatekeeper for multiple responsibilities, the idea is that it would be preferable that it delegates those responsibilities rather than implements them itself. That would enable it to provide that interface without expanding the internal role of the class. If you find benefits in using them differently then I'm not suggesting you change anything. Since you asked I have to give you an accurate answer of what Page classes are originally designed for and what I consider best practices around them. 
This is how the core considers them, and doing likewise ensures things stay simple, efficient and scalable in the long term. But in PW flexibility is more important than rules and this is also true of Page classes. There's more than one way to do things well. I like your creativity, and if you've found something that works well for your projects over time and scale then stick with it.
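
To make the delegation idea concrete, here is a minimal sketch in plain PHP. It is not ProcessWire code: the `Page` class is only a stand-in so the example runs on its own, and `ProductAdminTools` and `pageListLabel()` are hypothetical names chosen for illustration. The point is that the admin-only label logic lives outside the page class, and one helper instance can serve any number of pages.

```php
<?php
// Stand-in for ProcessWire's Page class (assumption, so the sketch is self-contained).
class Page {
    public string $title = '';
}

// The page class stays a plain content type with no admin-only code.
class ProductPage extends Page {
    public float $price = 0.0;
}

// Hypothetical helper that owns the admin-only presentation logic.
class ProductAdminTools {
    // Build a label for the given page; products also show their price.
    public function pageListLabel(Page $page): string {
        $label = $page->title;
        if($page instanceof ProductPage) {
            $label .= ' (' . number_format($page->price, 2) . ')';
        }
        return $label;
    }
}

$product = new ProductPage();
$product->title = 'Widget';
$product->price = 9.5;

// A single tools instance can be reused for every page object.
$tools = new ProductAdminTools();
echo $tools->pageListLabel($product), PHP_EOL;
```

Because the helper takes the page as an argument, the same method can be shared between different page classes without extra inheritance or traits.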

-

FYI the ProFields Table field does this quite well, and I'm currently using it for this. You could also do the same with a repeater, but Table would do it more efficiently. If you need 2 dates (like a range) then also note there is FieldtypeDateRange/InputfieldDateRange available in ProFields as well.

-

Thank you for all the feedback, I'm enjoying reading it and it's opening up a lot of good ideas, so please keep it coming. I also have some ideas to add. With regard to big/major features, I'd ideally like to focus on things that absolutely must be in the core, and not on things that can be built and supported by others as modules. My wishlist would be that ProcessWire focuses more on its roots, being the best it can be as a framework and CMS for running modules. If I can focus on that part of the core, and let others develop major new features with modules, then not only would the core be that much better, but I think I'd have the bandwidth to do things like develop a more formal testing framework, make the docs that much better, have more time for issues and requests, etc. Things like media/asset managers and web/block builders/editors (as examples) are best built by those that use them. When you are both the user and developer, you can develop and support something better than if you hired someone to develop it. @bernhard is a great example, he has stepped up and built answers to several things that people ask for, and he's both developer and user of those things. I understand some of these external modules cost money. But when something doesn't cost money to you, it has still cost money and time resources from someone else, the developer, or whoever sponsored the developer. So it's a question of who pays for it and how they are doing so. My strategy here has been to focus on developing things that I can use in my projects and very often the clients help cover the cost. That's how much of ProcessWire has been built. Other features that I use but could not afford to develop and support for free have been developed into Pro modules. This helps fund the core too. I don't make enough to survive on that alone, so I do a lot of client work as well, and I like the diversity of work. 
When I say that one particular feature is not likely to be developed in PW's core in the next version, I'm not saying that it's a bad idea at all, nor am I rejecting it, but more likely that I don't have the ability to fund that feature at the moment. (But maybe will later). Or in many other cases, it may be that a particular feature might only be useful in some installations rather than most, and thus belongs as an external module. If something like RockPageBuilder or PageGRID or others could suit your needs on a particular project, support the developer and get it. The more we can do this, the more that developer can do, the more decentralized the responsibility for ProcessWire can be, and the more I think it benefits not just that module and developer, but the entire ProcessWire project. Maybe the developers of these modules would be open to having a free version with fewer features so people could get to know them better and upgrade when they need it. Kind of like how there is Lister and ListerPro. Here's one idea I'd like feedback on. What if ProcessWire 4.x would be just the core and only the modules essential for the "blank" profile? And that's all the core would be. Then, on the download page (or maybe the installer), you could select all the modules you want to be part of your installation. For instance, you know you'll need repeaters, so you check the box for the Repeater Fieldtype. You know you want Tracy, so you check the box for that. At this stage you could select both 1st party and 3rd party modules to basically configure your own ideal version. From here we could also highlight other options like the Page Builders mentioned. There would have to be some predefined options, like a "well appointed" installation that is the same as the core as it is now. 
It would be a whole different way of building ProcessWire, but much more back to the roots of PW, becoming even more of a community project, sharing the responsibility a little further and getting more people involved, growing the family.

- 127 replies

-

@Jan Romero No hacks are necessary and it's actually much simpler than I think you might realize. The row pagination essentially works the same as with pages, and you can override the current pagination in the same way (via start and limit). The only difference is where you call it from. Why don't you open a topic in the ProFields Table support board? I'm happy to answer any questions you have.

-

Elementor is not part of WordPress, but a plugin developed by someone else to run in WordPress. Maybe this is an opportunity for someone in ProcessWire. And there are already RockPageBuilder and PageGRID in ProcessWire to consider as well. The dominance of WordPress has always been the case, and it will likely continue to be the case. ProcessWire is unique and has always been something completely different from WordPress. The CMS projects that are trying to mirror WordPress never seem to last long because, if you want something like WordPress, then why not just use WordPress? Though I don't know anyone that really knows both well who prefers to develop in WordPress. Instead, WordPress dominance is largely a matter of name familiarity with clients, which is completely understandable. But there are also several things it genuinely does well, and I always believe in using the best tool for the job. If the types of projects you work on are a good match for WordPress, then great, but I hope you also have projects that are a good match for ProcessWire as well. ProcessWire fully supports it. Many (most?) of us have used it exclusively on new installations for years. I don't know what those are. It sounds like another opportunity for modules. But more info needed. Good idea. This is something I think I can do. Can you describe further? In the majority of cases, older modules will still work fine. I'm not aware of anything in the modules directory that does not work, but if any become apparent I will remove them. Maybe it would be a good community effort to identify modules that could be removed from the directory. I think this might turn off as many people as it turns on. I know I wouldn't like it. But if it can be automated as an installation option then sure, why not. ProcessWire already supports WEBP and has for a while. I was working on core AVIF support, but Robin S. released an AVIF module so I put mine on the back burner. 
And this may be the sort of thing that is better as a module, or maybe it'll be better in the core at some point, I'm not sure. Right now we don't build core versions in a way that breaks things from one to another. At least not in a way that is known at the time of assigning a version number. When that changes, I think we'll want to adopt semantic versioning, and maybe that's a part of 4.x. Maybe it would benefit 3rd party modules more in the shorter term, so it's worth looking at there first. Sounds interesting but I need more details. If there's anyone that is an nginx expert that wants to step up to have this responsibility I'd welcome it. I've mentioned this before too, but nobody has stepped up. I'm not an nginx expert so don't feel qualified to support it on my own. For real? This is the first I've heard of any chaos. Good to hear. We do have the PageAutosave module which does Live Preview even better than ProDrafts. This module is available in ProDevTools or ProDrafts boards, but maybe it can have a wider release. This is exactly the sort of thing that PW is great at supporting already. But I have been exploring building an everything-SEO module here, as it's something I'd like to use and support, but just not as part of the core. The hard-core SEO experts will still want to do their own SEO stuff like they do now, but for those that want something they can turn-key and have it all ready, a module is ideal. I'll very likely be building one within the next year hopefully.

-

ProFields Table gets a ton of love, perhaps the most of any module I develop currently, as I work with it every single day. I looked at all of the threads you linked. The first linked thread has been waiting for your reply for a while, and the last one is already in the GitHub queue. In the others, I'm seeing feature requests as well as some back-and-forth between us about one behavior or another, but none appear to be actual bugs. If you disagree on one or another, drop a reply to bump it in the relevant thread. I try to focus on features that will benefit the largest audience. Following that I do try to add support for new selector features and things of the sort you've mentioned. Sometimes folks ask for things that might take more time than I have to implement and might only be used by one person, so I have to be selective in what gets added when, whether in Table, the core or any other modules. Generally if someone needs one particular feature and it won't take a lot of time to implement then I do try to implement it when the request comes in. If I've not yet implemented something you want then it's more than likely just a matter of not having the time to do it immediately. That doesn't mean I don't love you, it just means I'm working within the resources I have.

-

@szabesz I think the phrase "everything necessary" might be worth considering here. A class ideally just has one responsibility. Page instances are there to provide an interface to one page's content and be swapped in and out of memory on demand. When there are things like hooks going into a Page, that changes the role completely. If you start doing this a lot on a large site then it may affect scalability. Maybe it's fine for one individual installation or another, however. In your case, this isn't an issue, as you've solved that concern with a static method. I think that's okay for an individual installation, but it wouldn't be okay for PW to suggest for everyone because it's not multi-instance safe. The core also avoids static methods when possible because they are disconnected from any instance of the class. When there are static methods, it's always worth looking closer at whether they are worth the convenience (sometimes they are) or whether they would be better off somewhere else. Using hooks in your /site/init.php, /site/ready.php or /site/templates/admin.php (when appropriate) is a safe strategy, but I agree it's not great if you have a ton of them. At that point it might be good to have an autoload Module, or to start including your own files from one of the previously mentioned ones. But if you find it beneficial to use /site/classes/YourPageClass.php then also consider a separate class (ProductPageTools.php?). Rather than having a static method, you can have a static variable in your ProductPage class that refers to that instance, but there will only ever be one of them, and it will be multi-instance safe. Page classes already do this for a lot of things, delegating to other classes (PageTraversal, PageValues, PageProperties, etc.) for code that can be shared among all Page classes. There's only ever one instance of those classes, no matter how many Page class instances there are. And they are all multi-instance safe. 
This also keeps the memory requirements of the Page classes very low, since the Page class has very little code itself, and mostly delegates to other classes where the same single instance operates on all Page classes.

    class ProductPage extends Page {
      static private $tools = null;
      public function wired() {
        parent::wired();
        if(self::$tools === null) self::$tools = $this->wire(new ProductPageTools());
      }
    }

    class ProductPageTools extends Wire {
      public function wired() {
        parent::wired();
        $this->wire()->pages->addHook('saveReady(template=product)', $this, 'hookSaveReady');
      }
      public function hookSaveReady(HookEvent $e) {
        // ...
      }
    }

Maybe you have a method with a lot of code that you want to be callable from ProductPage (any number of instances) but maintain in ProductPageTools (only ever one instance). This is how the core delegates to other classes and limits the scale and scope of classes that may have many instances.

    class ProductPage extends Page {
      // ...
      public function foo() {
        return self::$tools->foo($this);
      }
    }

    class ProductPageTools extends Wire {
      // ...
      public function foo(Page $page) {
        $result = '';
        // A whole bunch of code to generate $result from $page
        return $result;
      }
    }

If the strategy you are using now works well for you then I'm not suggesting you change it. But just wanted to point out the strategy I'd suggest as a scalable and multi-instance safe one that also maintains separation of concerns and keeps the Page class from becoming complex or heavy.

-

@Ivan Gretsky @cb2004 @David Karich Sorry, I must not have written it very well. I'm tired, but wired from coffee at the same time, maybe not a good combination. In any case, I'm enthusiastic about most of the suggestions, even if I can't implement them all. I've updated the original post to just summarize the main points instead.