Posts: 126

Everything posted by muzzer

-

Thanks for this, guys. Soma, your results are more like what I would expect. Some older (but comparable) modx sites I'm running on the same server load in under 100ms. WillyC, the benchmarks are very simple: PHP microtime measures the various segments from the beginning of the index.php file through to the end of rendering the page footer. No multi-language. I have just uninstalled a handful of unused modules (editors, module manager etc., all backend/admin modules), so it's now a barebones install. Startup is now between 200-300ms, which seems to be an improvement (and is entirely acceptable to me). I have not developed modules so don't know how they work, but I assume admin modules should only load on backend pages? I'll need to go back over this and see if it is indeed modules that are the problem, but I would not have thought so. Still, I wonder why my modx sites are so blazingly fast compared to PW sites, even for static (no API calls) pages. I know PW is more advanced and offers much more than modx, so some overhead is expected there, but it does seem a little out of proportion. Don't get me wrong, I'm not complaining, lovin' PW
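The checkpoint timing described above can be sketched as a small helper. This is a minimal sketch assuming PHP 7.4 or later; the class name `CheckpointTimer` and the segment labels are hypothetical, and `usleep()` stands in for real bootstrap and template work. It uses `microtime(true)`, which returns seconds as a float, and reports every segment in milliseconds so seconds and milliseconds cannot get mixed up.

```php
<?php
// Checkpoint timer: records elapsed time between labelled points.
// microtime(true) returns seconds as a float; microtime() without the
// argument returns a "msec sec" string, which should not be subtracted.
final class CheckpointTimer
{
    private float $start;
    private float $last;
    /** @var array<string, float> seconds spent in each labelled segment */
    private array $segments = [];

    public function __construct()
    {
        $this->start = microtime(true);
        $this->last = $this->start;
    }

    // Store the time since the previous checkpoint under $label.
    public function mark(string $label): float
    {
        $now = microtime(true);
        $this->segments[$label] = $now - $this->last;
        $this->last = $now;
        return $this->segments[$label];
    }

    // Seconds from construction to the most recent checkpoint.
    public function total(): float
    {
        return $this->last - $this->start;
    }

    // One line per segment, in milliseconds, so units are never ambiguous.
    public function report(): string
    {
        $lines = [];
        foreach ($this->segments as $label => $seconds) {
            $lines[] = sprintf('%-12s %8.1f ms', $label, $seconds * 1000);
        }
        $lines[] = sprintf('%-12s %8.1f ms', 'total', $this->total() * 1000);
        return implode("\n", $lines);
    }
}

// Usage: on a real site the constructor call would sit at the top of index.php.
$timer = new CheckpointTimer();
usleep(20000);               // placeholder for bootstrap work
$timer->mark('startup');
usleep(5000);                // placeholder for template rendering
$timer->mark('template');
echo $timer->report(), "\n";
```

Keeping all segments relative to the previous checkpoint makes it easy to see which stage (startup vs template) dominates without doing subtraction by hand.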

20 replies. Tagged with: cache, markup cache (and 2 more)

-

Thanks for the suggestions, Jonathan. I'm not so concerned with bottlenecks in my code, as I know I can optimise these in due course. Although if the page is taking 700ms to load and 600ms of that is PW startup, there would not appear to be a lot of room to optimise my code! I am more concerned about the PW setup time (600ms), which I don't think I can get around without more severe caching or Cloudflare etc. And I don't want to go down that track, as pages are constantly changing (600+ users with the ability to update site data makes page caching difficult) and I have quite a bit of page-data tracking which, to my knowledge, I would lose if using Cloudflare (unless I handled it with JavaScript, which I'm not keen on). What I was hoping for was an idea from other v2.4 users of whether the 400-600ms benchmark shown above for pre-template parsing is normal, whether removing modules would improve this at all, and any other way I might improve it. Cloudflare may end up being an option I need to look at more closely. Thx for all the input/suggestions thus far, guys


-

@teppo, oops, that's a formatting error: those figures are seconds, so .602ms should read .602s, or just over half a second, and the same goes for all the other timings. This is a top-end figure; more often than not it's around 300ms. @cstevensjr, thanks for the links, good read. The timings, however, are not latency but PHP processing times. Sorry, I should have made that clear.


-

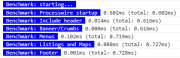

Playing around with the markup cache on a website which lists accommodation/activities. The page in question displays a number of listings (info about each accommodation operator) and then a Google map with markers for all the listings. I've cached (using MarkupCache) each listing and also the Google map markers, and the performance boost from this is pretty damn good; I'm stoked with the result. In the process I've got a bit obsessed with page-load speed and done some benchmarks; the attached image shows a sample page load. The troubling aspect is the time PW takes to get up and running. As you can see from the benchmarking info, the vast majority of page-load time is PW startup, that is, everything prior to the page template: the time is measured from the start of index.php to the start of the page template file. Is this normal? This is on a live server (shared host) and the value varies from 400-600ms. Do installed modules have any impact on this? Is there any way to speed this up? It's reasonably snappy, but if this can be improved then I'm all for it
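Caching each listing separately, as described above, follows a get, render-on-miss, then save pattern. The sketch below illustrates that pattern with a hypothetical file-based `FragmentCache` class. It is not the MarkupCache module's implementation; the class, its method names, the one-hour TTL and the listing id are all assumptions, and it needs PHP 7.4 or later.

```php
<?php
// Hypothetical file-based fragment cache showing the get / render / save
// pattern. Not the MarkupCache module's code; names are illustrative.
final class FragmentCache
{
    private string $dir;

    public function __construct(string $dir)
    {
        $this->dir = rtrim($dir, '/\\');
        if (!is_dir($this->dir)) {
            mkdir($this->dir, 0775, true);
        }
    }

    // Hash the key so any string maps to a safe filename.
    private function path(string $key): string
    {
        return $this->dir . DIRECTORY_SEPARATOR . md5($key) . '.html';
    }

    // Cached markup if it is younger than $ttl seconds, otherwise null.
    public function get(string $key, int $ttl): ?string
    {
        $file = $this->path($key);
        clearstatcache(true, $file);
        if (is_file($file) && time() - filemtime($file) < $ttl) {
            $data = file_get_contents($file);
            return $data === false ? null : $data;
        }
        return null;
    }

    // Write to a temp file, then rename, so readers never see half a file.
    public function save(string $key, string $markup): void
    {
        $tmp = $this->path($key) . '.' . uniqid('', true) . '.tmp';
        file_put_contents($tmp, $markup);
        rename($tmp, $this->path($key));
    }

    // Return cached markup, or build it with $render and cache the result.
    public function remember(string $key, int $ttl, callable $render): string
    {
        $markup = $this->get($key, $ttl);
        if ($markup === null) {
            $markup = $render();
            $this->save($key, $markup);
        }
        return $markup;
    }
}

$cache = new FragmentCache(sys_get_temp_dir() . '/fragment-cache-' . uniqid());
$renders = 0;
$listingId = 42;            // hypothetical listing id
for ($i = 0; $i < 3; $i++) {
    $html = $cache->remember("listing-$listingId", 3600, function () use (&$renders, $listingId) {
        $renders++;         // only runs on a cache miss
        return "<div class=\"listing\">Listing $listingId</div>";
    });
}
echo $renders, "\n";        // 1: rendered once, then served from cache
```

On a site where many users edit their own listings, putting something that changes on edit into the key (for example the page's modified timestamp) would let an edit produce a fresh fragment without clearing the cache by hand; that keying choice is a suggestion, not something the post describes.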


-

Exporting localhost database into a new database throws error!

muzzer replied to n0sleeves's topic in General Support

Can you post the first page of the SQL you are trying to import?

Wow, this looks sharp! I'll definitely need to check this out this week. I'm not a blogger, but this makes me want to be one. Well done, superb work kongondo.

-

Quoting @bwaked: "All together I did have valuable feedback from Nik." Respect to Nik, but I think the most valuable advice here is from cstevensjr. I also don't wish to sound rude, so please don't take this as such: when other users take the time to reply to your questions, you need to do likewise and take the time to study their responses before plunging onward. I have been guilty of the same myself at times, but I have been around this community a little longer than you and have come to realise there is an immense amount of knowledge among members, more so than in most communities, and their answers are usually spot-on so long as the question is clear and precise. Sometimes it just takes a little extra study of their responses to understand them. You will get a much more positive response from members if you treat their responses like the gold dust they usually are. Onward bro....

-

ProcessImageMinimize - Image compression service (commercial)

muzzer replied to Philipp's topic in Modules/Plugins

Hey Philipp, now that's starting to sound like a seriously useful module. Yes, I'm happy to help beta-test if you need more testers; otherwise I'm in no rush and will wait for the release. I've got other jobs on for the next few weeks, so no biggie. A one-click solution sounds sweet; will there be options to turn this on/off per image field?

ProcessImageMinimize - Image compression service (commercial)

muzzer replied to Philipp's topic in Modules/Plugins

Hey Philipp, I tested this just now and the results are good, e.g. 150k files down to 110k etc., with almost no effort on my part other than adding ->mz() to the image resize code: easy to set up and use. Beautiful. The big issue was page speed, which is ironic, as speeding up page load is the purpose of the module. A small image gallery page took 27 seconds to load the first time. I know this is an extreme case, minimizing all the images on the page at once, so some time lag is to be expected, but it does reinforce the need for the minimizing to be performed on upload (or perhaps async), not on page load. I can see the use for this module; I would use it on more image-heavy sites, especially image galleries, but minimizing on page load is a major gotcha for me. Cheers

@teppo, I read your post a while ago but could not remember where to find it again, so thanks for that: a very nice writeup on caching, and also good info on hooks for anyone looking for a clear explanation of them. Nice. I'll look into fieldtype caching. There does not seem to be much mention of it anywhere; googling it only returns a few vague bits and pieces (except your own post here, which gives a pretty good overview of it, I think). I'll give it a try anyway, as it sounds like it might be an option. @wanze, thanks for this suggestion. You are right, I'm calling find twice, which is stupid, so I'll definitely alter this. The timing tests I did showed the sluggishness was due to the actual retrieval of individual field data rather than the fetching of the page objects (which was fast), so I don't expect it to make a huge difference, but I'll look at any part that can be optimized, so this is a helpful suggestion.

-

Okay, I've done some timing tests and am amazed at the speed at which PHP logic is processed. Not that that's relevant in this case: the parts slowing the page down are the retrieval of PW page fields. To abbreviate: the site lists accommodation houses. The page I'm testing has brief listing info on 52 houses (I know, I could/should paginate, but don't want to in this case). Each brief listing echoes out or tests about 30 fields from a PW page. So (simplified), for each of the 52 listings:

```php
function generateListing($id) {
    $html = '';
    // cast to int (assuming listingId is numeric) so the value cannot alter the selector
    $page = wire('pages')->get('/listings/')->find('listingId=' . (int) $id)->first();
    $html .= $page->listingName;
    $html .= $page->postalAddress;
    if ($page->streetAddress != '') $html .= $page->streetAddress; // only output a non-empty address
    // ...and a heap more of these types of statements with various formatting/logic
    return $html;
}
```

Each $page->field request was timed as follows:

```php
$t = microtime(true); // true returns a float; plain microtime() returns a "msec sec" string
$html .= $page->streetAddress;
debug(microtime(true) - $t);
```

Each one comes in at approx 0.0015 s (1.5ms). That doesn't sound like much, but do it 30 times for each listing across 50 listings: 30 * 50 * 0.0015 s = 2.25 s, which is sluggish. So I'm assuming the 0.0015 s must represent a MySQL query. Are the field queries done as required rather than when the $page object is created? If so, is there any way to quickly query all page fields into an array in one swoop? I'm guessing the reason modx is so much quicker in this case is that all the fields are in one table, so one SELECT query pulls all 30 fields into a PHP array, whereas with PW I guess there are 30 separate queries happening here, one for each field?
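The arithmetic above can be checked with a small simulation that contrasts per-field lazy loading with a single batched query. It only counts hypothetical round trips under the post's own guess (one query per field on first access); no database is involved, and `LazyRecord`, `loadEager` and `QueryCounter` are made-up names, not ProcessWire classes. It assumes PHP 8.0 or later for constructor property promotion.

```php
<?php
// Simulation only: counts the round trips two loading strategies would make.
// LazyRecord assumes one query per field on first access (the post's guess);
// loadEager assumes one query returns every field for a batch of records.
final class QueryCounter
{
    public int $queries = 0;
}

final class LazyRecord
{
    /** @var array<string, string> fields already fetched */
    private array $loaded = [];

    public function __construct(private int $id, private QueryCounter $counter) {}

    public function __get(string $field): string
    {
        if (!array_key_exists($field, $this->loaded)) {
            $this->counter->queries++;    // pretend this is a SELECT for one field
            $this->loaded[$field] = "$field of #{$this->id}";
        }
        return $this->loaded[$field];
    }
}

function loadEager(array $ids, array $fields, QueryCounter $counter): array
{
    $counter->queries++;                  // pretend this is one joined SELECT
    $rows = [];
    foreach ($ids as $id) {
        foreach ($fields as $field) {
            $rows[$id][$field] = "$field of #$id";
        }
    }
    return $rows;
}

$fields = array_map(fn(int $n): string => "field$n", range(1, 30)); // 30 fields
$ids = range(1, 52);                                                // 52 listings

$lazy = new QueryCounter();
foreach ($ids as $id) {
    $record = new LazyRecord($id, $lazy);
    foreach ($fields as $field) {
        $value = $record->$field;         // first read of each field
    }
}

$eager = new QueryCounter();
$rows = loadEager($ids, $fields, $eager);

printf("lazy: %d queries, eager: %d query\n", $lazy->queries, $eager->queries);
// lazy: 1560 queries, eager: 1 query
printf("lazy at 1.5 ms per query: %.2f s\n", $lazy->queries * 0.0015);
// lazy at 1.5 ms per query: 2.34 s
```

With all 52 listings the estimate is about 2.34 s rather than the 2.25 s from rounding to 50. Whether ProcessWire really loads fields this way, and whether a field's autojoin setting changes that, is worth confirming rather than assuming from this sketch.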

-

Thought I would share the following experience here for anyone interested in caching in PW. I'm converting a site from modx (Evo) to PW and finding some interesting performance issues. One page which pulls in content from 50 other pages ran really well under modx: page load (uncached) was around 450ms (yeah, not that fast, but there's a lot of database action and formatting happening there). I converted the site to PW and it took a massive hit, loading in around 3200ms. I was surprised, as I always thought PW was supposed to be lightning fast. It's possible (probable?) I'm doing something with $pages which is not well optimized; I'm not sure at this stage, but will look into it more when I get a moment. Anyway, I found the two parts which were taking the longest to process and applied MarkupCache to these areas. I'd never used it before, but found it very easy and nice to work with, and it just works beautifully; superb, all credit to the developer. The result of this quick bit of optimising was page load time reduced from 3200ms to around 600ms. Impressive. And so easy to manage. While on the topic: there are several options for caching in PW, including template caching, MarkupCache, and ProCache. There are bits and pieces on the forums about each, but is there a writeup somewhere summarising the pros and cons of each? I was thinking of trying out template caching or, even better, ProCache, but the site is probably too dynamic (analytics, counters, live stats on the pages, etc.), so I'm thinking it will not be so good, yeah? Any advice welcome.

-

OK, thanks again Apeisa, that's one direction I didn't even think of looking in. There are a few other reports of identical problems on Windows, so I'm putting it down to being a Windows issue for now. Once I have hosting set up on a Linux server I'll have another look at it. Perhaps it's time to re-ditch Windows and reinstall Linux Mint. Oh why did I ever leave you, Minty

-

Thanks Apeisa, yeah, permissions and disk space were the first things I checked. Next was trying different files with sizes ranging from 1-9MB. All would work more often than not; probably 30% of the time they failed. I tried multiple browsers, cleared cache and cookies etc. It's odd because it copies and resizes the file OK, which suggests the problem is not permissions or lack of memory, yeah? It's just the rename that fails. The filenames are all regular characters.

-

A full sample error message: rename(C:/wamp/www/testdomain/site/assets/files/3077/blenheim.172x129_tmp.jpg,C:/wamp/www/testdomain/site/assets/files/3077/blenheim.172x129.jpg): Access is denied. (code: 5) - /testdomain/wire/core/ImageSizer.php:401. It seems ImageSizer takes "image.jpg" and copies it to an "image.widthxheight_tmp.jpg" file. It then resizes this file to the specified width and height and renames it to image.widthxheight.jpg. It is the last part that is failing. As a side note: I'm using the method from my earlier post because there appear to be no maxWidth and maxHeight parameters in the WireUpload class. I'm uploading images from the frontend rather than from the admin backend, which is what I normally do and where max dimensions can be specified. Is this the standard method for enforcing max image dimensions on a frontend upload?
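The copy-to-temp-then-rename step described above, with a retry added around the rename, might look like the sketch below. It rests on an unverified assumption: that the intermittent "Access is denied (code: 5)" on Windows comes from another process (an indexer or antivirus scanner, say) briefly holding the new file open. `renameWithRetry` is a hypothetical helper, not part of ImageSizer, and the attempt count and delay are arbitrary.

```php
<?php
// Hypothetical helper (not ImageSizer's code): rename with a few retries,
// assuming a transient lock by another process causes the occasional
// "Access is denied (code: 5)". Attempts and delay are arbitrary choices.
function renameWithRetry(string $from, string $to, int $attempts = 5, int $delayMs = 100): bool
{
    for ($i = 0; $i < $attempts; $i++) {
        if (@rename($from, $to)) {      // @ hides the warning; failure is returned instead
            return true;
        }
        clearstatcache(true, $from);
        if (!file_exists($from)) {
            return false;               // source is gone, retrying cannot help
        }
        usleep($delayMs * 1000);        // give the other process time to let go
    }
    return false;
}

// Usage mirroring the resize flow: write the _tmp file, then rename it.
$dir = sys_get_temp_dir();
$tmp = $dir . '/blenheim.172x129_tmp.jpg';
$final = $dir . '/blenheim.172x129.jpg';
file_put_contents($tmp, 'resized image bytes');   // stand-in for the resized image
$ok = renameWithRetry($tmp, $final);
var_dump($ok);
```

If retries make the error disappear, that would support the file-lock theory; if the rename still fails every time for a given file, the cause is more likely elsewhere.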

-

```php
if (wire('input')->post->submit) {
    $upload_path = 'c:/wamp/tmp/';
    // new WireUpload for the 'imageFile' form field
    $u = new WireUpload('imageFile');
    $u->setMaxFiles(1);
    $u->setDestinationPath($upload_path);
    $u->setValidExtensions(array('jpg', 'jpeg', 'gif', 'png'));
    // execute upload and check for errors
    $files = $u->execute();
    if (!$u->getErrors()) {
        // fetch the existing listing page to add the image to;
        // cast the GET value to int so it cannot alter the selector
        $lid = (int) wire('input')->get->lid;
        $uploadpage = wire('pages')->get('/listings/')->find("listingId=$lid")->first();
        $uploadpage->of(false);
        $uploadpage->listingImages->add($upload_path . $files[0]);
        $uploadpage->save();
        $uploadpage->of(true);
        // the page object already holds the new image, so no need to fetch it again
        $img = $uploadpage->listingImages->last();
        $img->size(1024, 768);
    }
}
```

The $img->size() call works most of the time but occasionally returns the error "Rename: Access is denied. (code: 5) - /domain/wire/core/ImageSizer.php:401", where the result is a new file "img.1024x768_tmp.jpg" but no "img.1024x768.jpg". Permissions and disk space are not an issue. The same file works one time and not the next, so it's very irregular. It does this with several different files, so there are no file-corruption issues or anything like that. Anyone seen this before?

-

Hey Ryan, looks cool, but I do wonder "What's the point?" Google seems to be continually crawling the hell out of my sites, and even sites with a massive number of pages deep in the tree are well crawled. I keep reading everywhere that I "should have" an XML sitemap to make crawling more effective, but no one ever explains exactly how it will help when the sites are already well crawled. All I see is an out-of-date sitemap being potentially damaging. I know this thread is old and it's probably not the place to start a discussion on sitemaps, but it would be nice to have a definitive answer other than "because you should".

-

Thx Sinnut, that's a great link. I think I've pretty much nailed PDO basics today using various tuts on the net, but the nettuts one is one of the better ones. You're right, it's actually pretty straightforward, just a slightly different way of thinking from what I'm used to. Will bookmark the link for reference. Cheers

-

Thank you Sinnut and Pete for absolutely awesome responses; they covered everything I was unsure of with beautiful clarity. Prepared statements/PDO are new to me, and there seems to be a lot of info on StackOverflow, but it often confuses rather than clarifies; it's starting to make sense now, though. And of course, now that I can get my hands dirty thanks to the examples provided and hash around with PDO statements, it should all become clear pretty quickly, I imagine. I can't emphasize enough my appreciation for the time you PW gurus put into providing such detailed information for newer users. Oftentimes all that's needed is a straightforward explanation of something seemingly simple, and then everything just clicks and we're away, which is exactly what you've provided.

-

OK, so I'm starting to gel with the idea of fields = tables, pages = a collection of several fields (tables), etc. As you say Pete, it's a matter of unlearning what I'm used to and looking at things from a slightly different perspective. Using SQL I can select rows using DISTINCT; for example, if I have 100000 rows with different IP addresses, I can count all the distinct IP addresses in a table (or field) with a single SQL query in a split second. To my understanding, to do this using the API and selectors I would need to foreach through every single page, which is mighty memory-intensive and slow. Correct? If so, I still have a need for direct SQL queries, even if using pages rather than custom tables. I've searched for examples of how to do these and gather the $database var is used, but there don't seem to be any clear examples of connect, insert, select etc. using PDO. Can someone point the way if such exist? Do I need to "connect", or is a connection already available, and is this what $database represents? I realise PDO is a recent addition, so this may be why I can't find an example of what I'm after. Any help appreciated
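The single-query DISTINCT count described above can be sketched with plain PDO. To keep it runnable on its own, this minimal sketch uses an in-memory SQLite database (it needs the pdo_sqlite extension), and the `visits` table and `ip` column are hypothetical. ProcessWire 2.4's `$database` API variable is, to my knowledge, an already-open PDO-based connection, so the same `prepare()`/`execute()`/`query()` calls should carry over without a separate connect step; treat that as an assumption to check.

```php
<?php
// Standalone PDO example: count distinct IP addresses with one query.
$db = new PDO('sqlite::memory:');
$db->setAttribute(PDO::ATTR_ERRMODE, PDO::ERRMODE_EXCEPTION);
$db->exec('CREATE TABLE visits (id INTEGER PRIMARY KEY, ip TEXT NOT NULL)');

// Prepared insert: the statement is parsed once and executed per row.
$insert = $db->prepare('INSERT INTO visits (ip) VALUES (:ip)');
foreach (['10.0.0.1', '10.0.0.2', '10.0.0.1', '10.0.0.3', '10.0.0.2'] as $ip) {
    $insert->execute([':ip' => $ip]);
}

// One query, no PHP loop over rows: the database does the de-duplication.
$uniqueIps = (int) $db->query('SELECT COUNT(DISTINCT ip) FROM visits')->fetchColumn();
echo $uniqueIps, "\n"; // 3
```

Pushing the aggregation into SQL means only a single number crosses into PHP, which is why it stays fast at 100000 rows where iterating over pages would not.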

-

Hey diogo, thanks for that. This is it in a nutshell. I guess I'm kind of struggling to accept that pages could be used for such a thing as I've outlined above, and I'm sure I'm not the only ex-modx-er here feeling this; it just feels wrong. Under modx, using pages (or resources in modx-speak) for such a thing would be simply farcical. So accepting the idea of using pages in this fashion completely goes against the methods I've been using for years, and it's kinda doing my head in. Having said that, it is exciting to think PW can handle this, as, like you say, it allows for the use of the API, which is fantastic. You're right, there are posts in the forum regarding custom tables, which I have now found using Google rather than the forum search. Lesson learned. Cheers

-

One thing I love about PW is the built-in variables ($pages etc.) and selectors/methods which allow easy access to page data in the database. Another is the beautiful API, which allows easy manipulation of page data. It's damn awesome, I have to say. Unparalleled in any other system I've used. But there are times when I want to use a custom table rather than pages, for example for collecting stats or logging changes. One tourism site I have collects info on every booking/enquiry made from a tourism operator's page, another logs every alteration a client makes to their particular page, and these tables may have several hundred thousand rows. I'm thinking a PW page representing every row is not ideal for this, and a custom table is more suitable. Please, shoot me down in flames if I'm wrong in this assumption - I would like to be wrong about this! Coming from a modx background: one thing about modx is that, because it didn't have this type of system for selectors and a sweet API, you were forced to use more basic techniques for accessing the database. E.g. to select stats from my custom stats table relating to the site homepage and covering the last two days, I might do this: $handle = $modx->db->select( '*', 'pagestats', "page='homepage' AND hours='48'" ); Can one of you PW gurus offer some pointers on basic database access in PW 2.4 with PDO? How to connect, insert a row, update it, even select rows like the above query. Or is this something that's "just not done", and "what the hell man, just use pages!"
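The insert, update and select asked about above, including an equivalent of the modx select, can be sketched with PDO prepared statements. This is a minimal sketch assuming PHP 8 and the pdo_sqlite extension so it runs standalone; the `pagestats` table and its `page`, `hours` and `views` columns are hypothetical, loosely following the modx example. Against MySQL through ProcessWire's `$database`, the same prepare/execute pattern is assumed to apply, with MySQL column types instead.

```php
<?php
// Custom stats table: insert, update and select with prepared statements.
$db = new PDO('sqlite::memory:');
$db->setAttribute(PDO::ATTR_ERRMODE, PDO::ERRMODE_EXCEPTION);
$db->setAttribute(PDO::ATTR_DEFAULT_FETCH_MODE, PDO::FETCH_ASSOC);
$db->exec('CREATE TABLE pagestats (
    id INTEGER PRIMARY KEY,
    page TEXT NOT NULL,
    hours INTEGER NOT NULL,
    views INTEGER NOT NULL DEFAULT 0
)');

// Insert a row; values are bound, never concatenated into the SQL.
$insert = $db->prepare('INSERT INTO pagestats (page, hours, views) VALUES (:page, :hours, :views)');
$insert->execute([':page' => 'homepage', ':hours' => 48, ':views' => 10]);
$id = (int) $db->lastInsertId();

// Update the same row by primary key.
$update = $db->prepare('UPDATE pagestats SET views = views + :n WHERE id = :id');
$update->execute([':n' => 5, ':id' => $id]);

// Equivalent of $modx->db->select('*', 'pagestats', "page='homepage' AND hours='48'").
$select = $db->prepare('SELECT * FROM pagestats WHERE page = :page AND hours = :hours');
$select->execute([':page' => 'homepage', ':hours' => 48]);
$rows = $select->fetchAll();
print_r($rows);
```

Binding parameters instead of building the WHERE string by hand also removes the quoting and injection worries that come with the modx-style string condition.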

-

What! That's already me on a good day

-

Thanks for the great replies, all. No, the port is from modx Evo, not Revo. When Revo came into beta I had a play around and decided it was not my thing (and, as pwired mentioned, the admin was so damn slow); that's when I went hunting and was lucky enough to stumble on PW. Man, I actually enjoy doing websites again now. Thanks for the explanation on the database, Joss, exactly what I was looking for. I will get the site up and running and then look at template caching or ProCache.

-

Hells, that was quick, guys, thanks for the advice. From a usability point of view, I'm the only one editing, so I don't see this as an issue. I realise 1000 pages is nothing; it was more the number of fields (tables) I was concerned with. Out of interest, how is the page data collected when there are 60 fields (i.e. 60 tables)? Is each individual table queried (60 queries?), or is there some cunning query magic going on here? Usability aside, what would be a practical limit for the number of fields, or is there essentially not one? For example (I know it's nuts, but...) could it handle 1000 fields okay?
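The question above about 60 fields in 60 tables can be made concrete with a toy "one table per field" layout. This sketch shows that several field tables can be read for many pages with one JOIN rather than one query per field. The schema (`pages`, plus `field_title` and `field_body` tables with `pages_id`/`data` columns) is an assumption loosely modelled on how ProcessWire stores field data; it does not describe the queries ProcessWire actually issues. It needs PHP 8 and pdo_sqlite.

```php
<?php
// Toy "one table per field" schema, loaded with a single JOIN.
$db = new PDO('sqlite::memory:');
$db->setAttribute(PDO::ATTR_ERRMODE, PDO::ERRMODE_EXCEPTION);
$db->exec('CREATE TABLE pages (id INTEGER PRIMARY KEY, name TEXT)');
$db->exec('CREATE TABLE field_title (pages_id INTEGER PRIMARY KEY, data TEXT)');
$db->exec('CREATE TABLE field_body (pages_id INTEGER PRIMARY KEY, data TEXT)');

// Each page's field values live in separate tables keyed by pages_id.
foreach ([1 => 'home', 2 => 'about'] as $id => $name) {
    $db->prepare('INSERT INTO pages (id, name) VALUES (?, ?)')->execute([$id, $name]);
    $db->prepare('INSERT INTO field_title (pages_id, data) VALUES (?, ?)')->execute([$id, ucfirst($name)]);
    $db->prepare('INSERT INTO field_body (pages_id, data) VALUES (?, ?)')->execute([$id, "Body of $name"]);
}

// LEFT JOIN so a page with an empty field still appears (NULL for that field).
$sql = 'SELECT p.id, p.name, t.data AS title, b.data AS body
        FROM pages p
        LEFT JOIN field_title t ON t.pages_id = p.id
        LEFT JOIN field_body  b ON b.pages_id = p.id
        ORDER BY p.id';
$rows = $db->query($sql)->fetchAll(PDO::FETCH_ASSOC);
foreach ($rows as $row) {
    echo "{$row['id']}: {$row['title']} / {$row['body']}\n";
}
// 1: Home / Body of home
// 2: About / Body of about
```

One practical ceiling worth knowing: MySQL allows at most 61 tables in a single join, so loading 60+ field tables in one statement is right at the limit, and 1000 fields could not be fetched that way in one query. How ProcessWire itself balances joins against separate queries is not something this sketch shows.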