Posts: 474
Days Won: 31

Everything posted by FireWire

RockIcons - Easily select from thousands of SVG icons

FireWire replied to bernhard's topic in Modules/Plugins

@bernhard I think you make a really good point, and I could see myself using either sorting by name or by folder for different projects. 100% agree on the backwards compatibility issue as well. I see the value in having it both ways, and a setting would be really great!

RockSettings - Manage common site settings like a boss.

FireWire replied to bernhard's topic in Modules/Plugins

@bernhard Unfortunately not yet. I have been jumping around on projects and haven't come back to it, haha.

RockIcons - Easily select from thousands of SVG icons

FireWire replied to bernhard's topic in Modules/Plugins

Made a tweak in the module that has helped; maybe a feature suggestion? I have two icon sets that I'm using, in folders called "material-icons" and "extras". The "extras" folder contains one-off icons that supplement Material Icons when needed. When selecting icons, they are ordered by folder name, which caused the 'X' social network icon in the "extras" folder to appear before icons with names beginning with 'a' in "material-icons". I added a little bit of code on line 34, where the values are rendered, to sort by icon name separately from the collection/namespace:

```php
<?php

class InputfieldRockIcons extends InputfieldText implements InputfieldHasTextValue
{
    // ...

    /**
     * Render the Inputfield
     * @return string
     */
    public function ___render()
    {
        $module = rockicons();

        // Added sorting by key/name: compare only the part after ':' (the icon name),
        // ignoring the folder/collection prefix
        $availableIcons = $module->icons();
        uksort($availableIcons, fn ($a, $b) => explode(':', $a)[1] <=> explode(':', $b)[1]);

        // prepare icons
        $icons = "";
        foreach ($availableIcons as $name => $path) {
            $selected = ($name == $this->value) ? "selected" : "";
            // ...
        }
        // ...
    }
}
```

Quick fix, and the results feel more natural. RockIcons is another great module of yours I can't live without. Thanks for all of your hard work!
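A slightly more defensive variant of the sort above, as a minimal standalone sketch: it assumes icon keys look like `collection:name` (as in the snippet), falls back to the whole key when there is no `:`, uses natural case-insensitive ordering (so `icon2` sorts before `icon10`), and breaks ties on the full key so identically named icons from different folders stay in a predictable order. `sortIconsByName()` is a hypothetical helper, not part of RockIcons.

```php
<?php

// Hypothetical helper (not part of RockIcons): sorts an icon map keyed
// "collection:name" by the name part only.
function sortIconsByName(array $icons): array
{
    // Name part of a key; keys without ':' are used as-is
    $nameOf = function (string $key): string {
        $pos = strpos($key, ':');
        return $pos === false ? $key : substr($key, $pos + 1);
    };

    uksort($icons, function (string $a, string $b) use ($nameOf): int {
        // Natural, case-insensitive comparison of the icon names
        $byName = strnatcasecmp($nameOf($a), $nameOf($b));
        // Tie-break on the full key so the order is deterministic
        return $byName !== 0 ? $byName : strcmp($a, $b);
    });

    return $icons;
}

$icons = [
    'extras:x-social' => '/icons/extras/x-social.svg',
    'material-icons:arrow' => '/icons/material-icons/arrow.svg',
    'material-icons:add' => '/icons/material-icons/add.svg',
];

$sorted = sortIconsByName($icons);
// array_keys($sorted): material-icons:add, material-icons:arrow, extras:x-social
```

Inside `___render()` this would replace the inline `uksort()` call, e.g. `$availableIcons = sortIconsByName($module->icons());`.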

Repeater fields in block migrations and duplicate template generation

FireWire replied to FireWire's topic in RockPageBuilder

@bernhard I took a quick look and understand what you mean. Thanks!

Repeater fields in block migrations and duplicate template generation

FireWire replied to FireWire's topic in RockPageBuilder

@bernhard I've only experienced this when creating migrations inside block PHP files for RPB. I haven't looked, but is there something inside RockPageBuilder that filters block migrations before they're handled by RockMigrations?

Repeater fields in block migrations and duplicate template generation

FireWire replied to FireWire's topic in RockPageBuilder

Can I get access to the repo? I can't guarantee I can tackle this right now, but when I can, I'd be happy to work on it!

Found something that may be of help to others. I have an RPB block that has a basic repeater field and uses a block migration. Rather than writing the migrations by hand, I've been copying the RockMigrations code shown for fields to make short work of writing migrations in block files.

Example:

Running migrations ended up causing a system template to be created every time modules were refreshed:

I found the culprit in the block migration:

```php
<?php

$rm->migrate([
    'fields' => [
        'listItems' => [
            'label' => 'List Items',
            'type' => 'FieldtypeRepeater',
            'fields' => [
                0 => 'text',
            ],
            'template_id' => 0, // Potentially causes duplicate template creation
            'parent_id' => 0, // Potentially causes duplicate template creation
            // ...
        ],
    ],
    // ...
]);
```

Removing those two migration properties for that field resolved the issue. I'm not sure whether this affects other field types in block migrations, since I haven't created many in this project yet, but it's something to keep in mind when using the migration code output from a field's edit page.

@bernhard Could blocks be updated so that migration arrays are "sanitized" to remove any properties that would conflict with how RPB needs fields to be created?

Thanks for your work as always, @bernhard!
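The "sanitizing" idea from the question above could look roughly like this. It is a minimal sketch under stated assumptions, not how RockPageBuilder or RockMigrations actually handle migrations: `sanitizeBlockMigration()` is a hypothetical helper, and limiting it to `FieldtypeRepeater` is an assumption, since the post only confirms the issue for a basic repeater.

```php
<?php

// Hypothetical helper, not part of RockPageBuilder or RockMigrations:
// removes properties copied from a field's migration code that pin a repeater
// to specific template/parent IDs. Which field types to clean is an
// assumption; only FieldtypeRepeater is confirmed to be affected.
function sanitizeBlockMigration(
    array $config,
    array $types = ['FieldtypeRepeater'],
    array $strip = ['template_id', 'parent_id']
): array {
    foreach ($config['fields'] ?? [] as $name => $field) {
        // Skip shorthand entries and field types we don't want to touch
        if (!is_array($field) || !in_array($field['type'] ?? null, $types, true)) {
            continue;
        }
        foreach ($strip as $key) {
            unset($config['fields'][$name][$key]);
        }
    }
    return $config;
}

$config = [
    'fields' => [
        'listItems' => [
            'label' => 'List Items',
            'type' => 'FieldtypeRepeater',
            'fields' => [0 => 'text'],
            'template_id' => 0,
            'parent_id' => 0,
        ],
        'intro' => [
            'label' => 'Intro',
            'type' => 'FieldtypeTextarea',
        ],
    ],
];

$clean = sanitizeBlockMigration($config);
// In a block file the cleaned array would then be passed on: $rm->migrate($clean);
```

Because the helper returns a copy, the original array copied from the field's edit page stays untouched and can be compared against the cleaned version while debugging.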


Version 2.0 released. Please review the notes below on upgrading from versions before 1.0.8. This version provides bug fixes and is recommended for everyone.

In version 1.0.8, changes were made to how Fluency stores configuration data to address issues where ModSecurity rules may be tripped in some server environments. Despite my best efforts, some users have experienced issues when upgrading from version 1.0.7 or earlier. They have been difficult to reproduce, and fixes have worked in some instances but not others. Fluency has been bumped to version 2.0 because these changes have proven to be breaking.

If you are upgrading from any version before 1.0.8 to version 2.0.0 or later, it is highly recommended that you:

1. Fully uninstall Fluency.
2. Download the new version and move it to the modules folder.
3. Reinstall Fluency.

Upgrading from 1.0.8 or later does not require these steps.

There is no danger to your site's content, but you will have to reconfigure the module. This includes choosing your Translation Engine, entering your API key, and configuring your language associations. If you don't have immediate access to the API key you have been using, temporarily copy/paste that key into a separate location and re-enter it after reinstalling the module.

Since this is the identified preventative solution, I'm not able to provide support for upgrade issues. In the unlikely event that you still experience issues, check that all Fluency-related database tables were removed when uninstalling Fluency. These are probably edge cases, but that is the surefire way to address any problems.

Thank you for your patience, understanding, and helpful feedback!

220 replies · Tagged with: translation, language (and 1 more)

@nurkka I haven't thought of that situation before. It would take a whole different approach to translation, manipulating the language files directly, because there's no way to get the translated strings from another language. There's an experimental feature in Fluency to translate any file or files in ProcessWire (core, module, template, etc.); unfortunately, it only translates from the default language. It is possible to do what you're asking, but I am too overloaded with work right now to implement it. I do see the value, though, and would like to make it part of Fluency in the future; the file translation that is already present would make it possible. If you want to experiment with bulk translations and still work with importing/exporting the JSON files, check out the $fluency->translateProcessWireFiles() method. The docblock starting on line 494 of Fluency.module.php has full usage explanations.


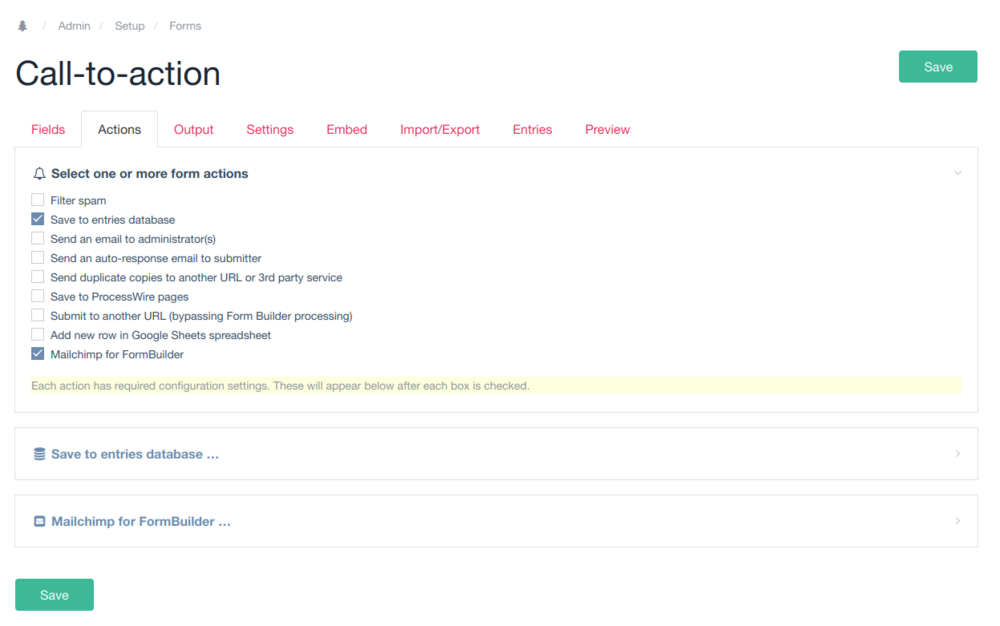

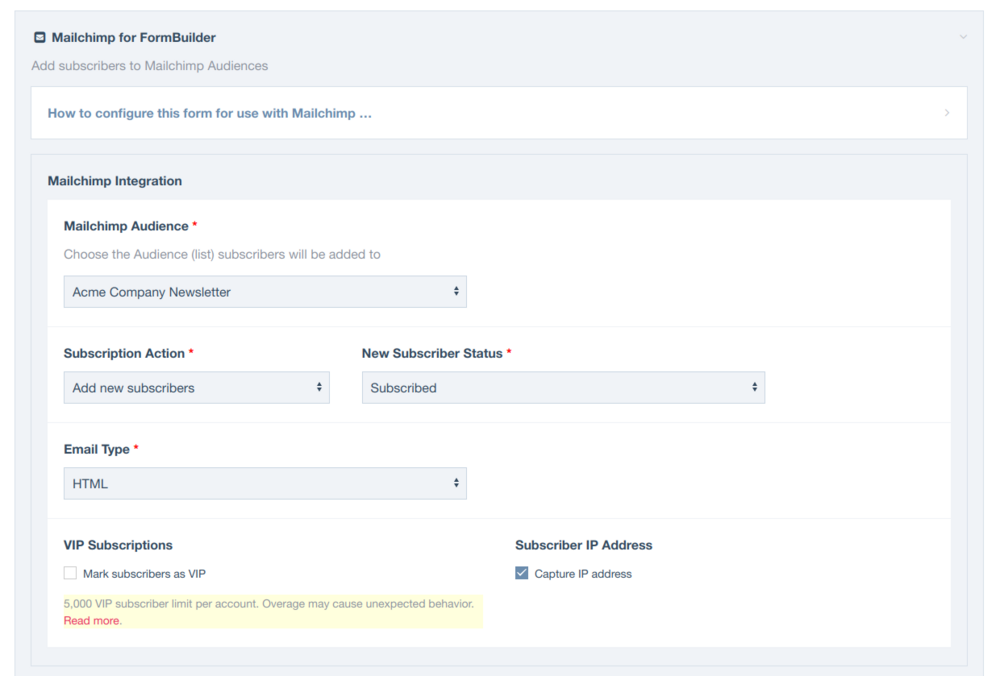

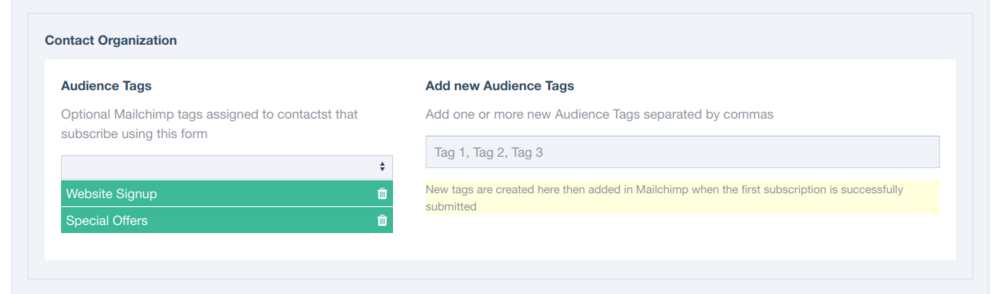

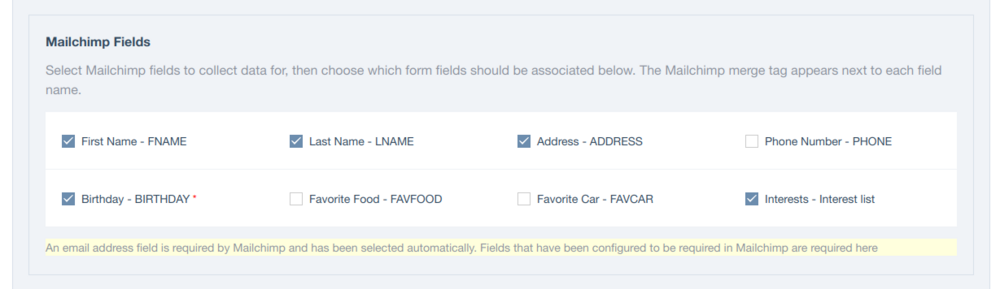

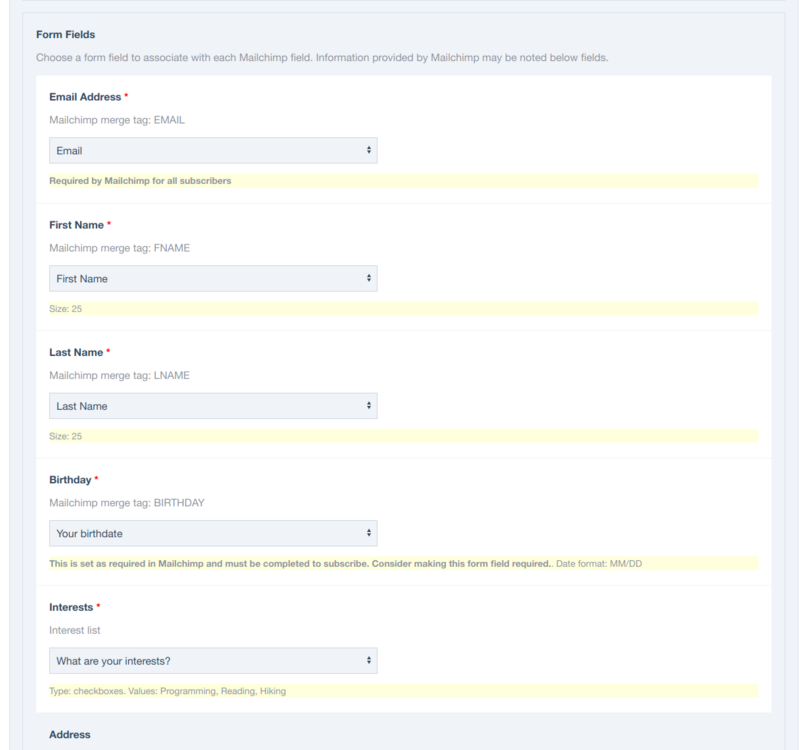

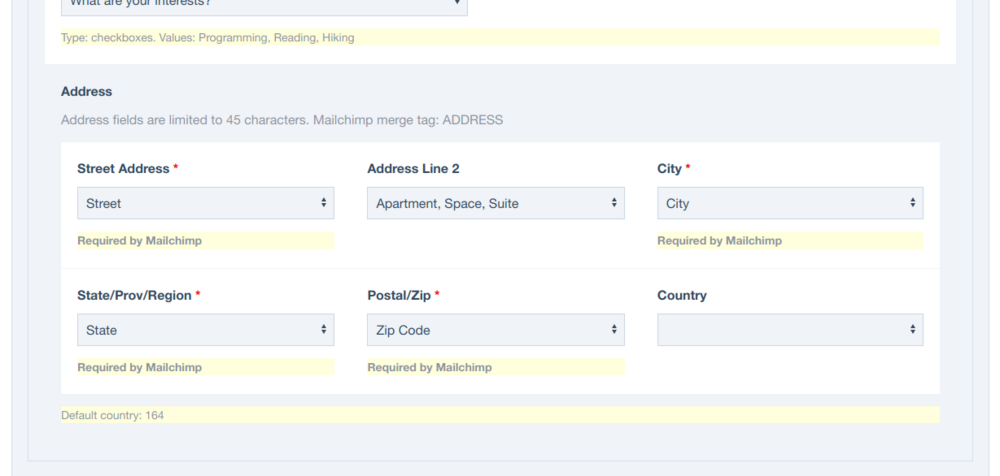

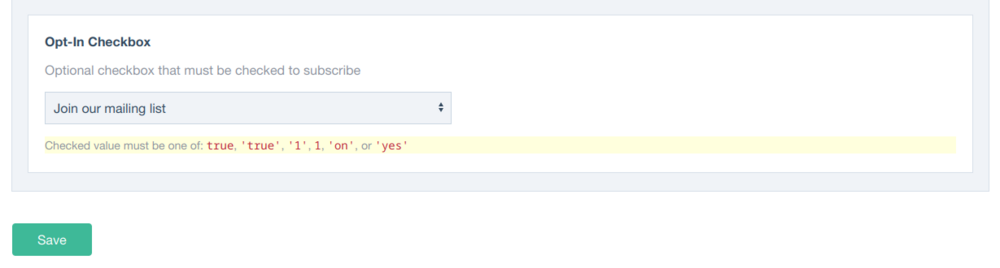

Hello! Mailchimp is a popular email marketing platform and the one my clients use more often than any alternative. After having to work with embedded HTML and generic Textarea fields output to pages, I wanted to create a better experience and more robust form management. Mailchimp for FormBuilder is a module that adds a new form processing Action to form configuration in FormBuilder. It's intended to be easy to use for both ProcessWire developers and end users while being very configurable. This module lets you do more in ProcessWire faster, with great feature parity.

Here are some module features:

- Create new FormBuilder forms that collect Mailchimp subscription signups
- Add Mailchimp subscription signup abilities to any existing FormBuilder form
- Each FormBuilder form is configured independently, so you can create forms for specific Mailchimp needs
- Compatible with any type of field that can be configured in Mailchimp
- Manage Mailchimp settings right where you configure other FormBuilder form settings
- Stop pasting Mailchimp form embed codes and make subscribing to Mailchimp lists easy using your forms, styled the way you want
- No need to create redirect pages for successful signups; let FormBuilder handle the form submission experience
- Choose what actions to take: add new subscribers, update existing subscriber contact information, or unsubscribe existing contacts
- Logs error messages encountered while communicating with Mailchimp, or when form submission issues prevent sending a subscription to Mailchimp
- Provides in-depth logging for each event during the subscription process while ProcessWire is in debug mode, to help developers troubleshoot and/or understand what is happening behind the scenes
Mailchimp for FormBuilder aims to take advantage of the core features Mailchimp offers:

- Audiences (lists): Collect subscriptions for any Audience in Mailchimp
- Audience Tags: Organize and segment your Mailchimp contacts with one or more tags
- Form Fields: Collect subscriber information using text, number, radio button, checkbox, dropdown, date, address, website, and phone fields
- Interest Groups: Create Interest Group fields in Mailchimp to further organize your contacts and create targeted campaigns

Screenshots:

Install the module and add your Mailchimp API key in Mailchimp for FormBuilder.

Edit your form, choose your audience, save, and return to configure. In addition to configuration options, Mailchimp for FormBuilder includes robust high-level instructions under "How to configure..." that help less-technical end users manage their forms.

Choose your audience, subscription action, and status. Choose whether to only accept new subscribers, add and update existing contacts, or unsubscribe. Pre-define whether a subscriber will receive HTML or plain text email, or choose a field to let people filling out your forms decide. Optionally mark subscribers as VIP, and choose whether to provide Mailchimp with the subscriber's IP address at the time of signup.

Choose from existing Audience Tags, or create new tags right in ProcessWire. Audience Tags created while editing one form become available as options for all forms.

Choose which Mailchimp fields should receive form data, as many or as few as desired. If a field is set as required in Mailchimp, it will be required here too. By default, Mailchimp only requires an email address for all subscribers.

Each Mailchimp field you select appears below, where you choose the form field that should be associated with it. Notes appear below each field where Mailchimp provides helpful information for configuring your form fields.
If a field has multiple options in Mailchimp, such as dropdown/radio/checkbox fields, the values Mailchimp expects are noted so that you can ensure your fields submit the correct information. Date fields show the format that Mailchimp uses for that field, but Mailchimp for FormBuilder automatically formats your date fields to match what Mailchimp requires, so no extra work is required. Fields that are configured in Mailchimp as an Interest List show the value(s) your form field can submit.

Collect information for complex fields such as addresses: just choose the form field for each Mailchimp field. Required fields help you make sure that your addresses will be accepted by Mailchimp.

You can also choose a checkbox in your form that determines whether a submission should be sent to Mailchimp. If a checkbox field is configured, it must be checked for your form data to be sent to Mailchimp; if it's left unchecked, the Mailchimp process is skipped entirely. This is a local field managed independently of Mailchimp.

Known limitations:

- File upload fields can't be processed. While Mailchimp provides a file URL field that can be used in forms, the files uploaded via FormBuilder are not yet ready when this module parses submission data.
- Mailchimp has added GDPR settings, but working with them via the API is a little more complex than expected. I'm not sure whether the GDPR features in Mailchimp are actually required for the EU, but I would like to eventually implement this to be feature-complete and more helpful for our European comrades.

Considerations to keep in mind:

This module makes every attempt to maintain parity between Mailchimp and ProcessWire configurations, but there are reasonable limitations to be expected. Because data is being sent to a Mailchimp account from an external source (ProcessWire), it is not possible to automatically know if changes have been made in Mailchimp. Audience Tags are a good example.
Tags may be created, edited, and deleted in Mailchimp, but due to how the API works, Mailchimp will still accept any tags submitted. So if you have a form that tags subscriptions "Special Offers" but that tag is deleted or modified in Mailchimp, "Special Offers" will be re-created the next time a form is submitted. If a Mailchimp field is changed to required but the form hasn't been updated, subscriptions may be rejected.

Mailchimp for FormBuilder fails silently, logging errors rather than preventing FormBuilder from continuing to process the submission. This prevents data loss and interruptions for the end user and allows other form actions to still take place. It is recommended that forms always store submissions in the database as long as this FormBuilder processor is in use.

This is an alpha release! I've built it for a project in progress, so it works as far as I've tested it. Please test thoroughly and share any bugs or issues here or by filing an issue on the GitHub repo. When it's had wider testing and a thumbs-up from users, I'll submit it to the modules directory. Thanks!

Download Mailchimp for FormBuilder from the GitHub repository.
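The opt-in checkbox rule described in this post boils down to a small yes/no decision. The sketch below is a hypothetical illustration, not code from Mailchimp for FormBuilder; the function name and the shape of `$submission` (submitted values keyed by field name) are assumptions.

```php
<?php

// Hypothetical illustration of the opt-in checkbox rule; not code from
// Mailchimp for FormBuilder. $optInField is the name of the configured
// checkbox (null or '' when none is configured) and $submission holds the
// submitted values keyed by field name.
function shouldSendToMailchimp(?string $optInField, array $submission): bool
{
    // No opt-in checkbox configured: every submission is sent to Mailchimp
    if ($optInField === null || $optInField === '') {
        return true;
    }

    // Checkbox configured: a missing or empty value skips Mailchimp entirely.
    // Note that empty() also treats the string '0' as unchecked.
    return !empty($submission[$optInField]);
}

$submission = ['email' => 'someone@example.com', 'newsletter' => '1'];

if (shouldSendToMailchimp('newsletter', $submission)) {
    // ...send the subscription to Mailchimp; other FormBuilder actions still run
}
```

Keeping the gate as a plain boolean check, separate from any Mailchimp API call, matches the post's approach: skipping the Mailchimp step never interrupts the rest of FormBuilder's processing.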

Tagged with: formbuilder, mailchimp (and 1 more)

@Klenkes That's a ProcessWire error for a field that is missing a value, not a Fluency error. Can you confirm that there are no empty required fields on the Fluency module config page? Fluency will not show any translation buttons unless the module has been properly configured.


@Klenkes Well, that's even worse... Can you confirm whether the dev branch version resolves the issue?


While this isn't a suggestion for the core, since it came up I want to second this. There are many great modules that would benefit from this: both those that are currently available and, just as importantly, modules that have yet to be built. I can say for myself that there are some things I'd love to create, but it's difficult to justify the effort to build, maintain, and improve them without the potential to recoup some of the real costs involved.

Listing the benefits of an official shop would make this comment far too long. I'm a big fan of the official Pro modules and I don't build sites without them; the same has become true for those offered by @bernhard. It would also really help newcomers adopt ProcessWire if the modules directory could become a platform to browse both paid and free modules. Decoupling the Pro module purchase experience from the forums would be really helpful and, I would argue, foster wider adoption. I see this as a long-term sustainability initiative for the ecosystem as a whole.

Maybe this could tie into ProcessWire core (hear me out). Modules could be downloaded in ProcessWire itself (as free modules are currently), and keys purchased in the store could then be entered where modules are managed in the admin. This would reduce the effort and friction of adopting high-quality modules for more projects and provide truly integrated support. I would love to install Pro modules as easily as those in the directory.

I would expect, and hope, to see a per-sale fee for commercial modules sold in an official shop, both to make this endeavor sustainable and to see something go back to the ProcessWire project. By that I mean I would like to build modules that buy @ryan a beer, preferably multiple, because that means things are going well.

There have been some great suggestions here, but I do want to take a moment to say thanks for the constant improvements I've seen.
Even the smaller quality-of-life changes in new versions are much appreciated as we enjoy a better developer experience. Cheers!


@Klenkes @markus-th Addressing issues that pop up when upgrading the module has been difficult since changing how data is stored. Can you confirm that after updating you checked the module configuration and that all of the settings are correct? Can you also download and test the version I just pushed to the dev branch? It works for me and has changes that may address the issue.


Oh heck yeah. This is awesome.


Would be great to see .env support. I think this base change would level up ProcessWire and make it an increasingly viable application framework that fits into modern workflows.

I think this would be a great idea. It would greatly help module development where modifying or appending behavior to existing elements is needed. I could see that having been useful when developing my Fluency module.

I'll throw in a thought about multi-site capability, after considering multi-tenancy for a Laravel project using a module. It may be a nice feature, but if there's a tradeoff in future features and development speed due to maintenance overhead, both for ProcessWire core and modules, it may not pencil out. I know it's not apples to apples, but creating two sites with a private API between them has worked for me in the past.


RockSettings - Manage common site settings like a boss.

FireWire replied to bernhard's topic in Modules/Plugins

@bernhard Wish I had more information, but the only step was installing the module. I did some digging but am still coming up short. I removed the custom page classes and still got the error, so we can rule that out. Here's more detailed output after attempting to install again:

The site is set up with language support, so that missing method error should not exist. I'm struggling to locate where exactly this issue is coming from, because $page->localPath() is working everywhere else. Maybe this is an issue with language support being installed before RockSettings is installed? I noticed this on lines 398-400 in SettingsPage.php:

```php
<?php
// make redirects be single-language
$tpl = $rm->getRepeaterTemplate(self::field_redirects);
$rm->setTemplateData($tpl, ['noLang' => true]);
```

This is a shot in the dark; I'm having trouble narrowing down where to look for more information.

RockSettings - Manage common site settings like a boss.

FireWire replied to bernhard's topic in Modules/Plugins

@bernhard Well, I hate to be that guy today (haha), but I have one more issue, and I think it's related to custom page classes(?). It seems odd because DefaultPage extends Page. I'm using ProcessWire 3.0.229 and RockSettings 2.2.1.

RockSettings - Manage common site settings like a boss.

FireWire replied to bernhard's topic in Modules/Plugins

@bernhard Thanks, I'll give that a shot!

RockSettings - Manage common site settings like a boss.

FireWire replied to bernhard's topic in Modules/Plugins

@bernhard It's the strangest thing... I am trying to set it to 'Hidden', which works when editing in template context. But after a modules refresh, it comes back with the original visibility. Those are the only steps I can come up with. All of the fields under that 'Media' fieldset keep popping back up after a modules refresh.

RockSettings - Manage common site settings like a boss.

FireWire replied to bernhard's topic in Modules/Plugins

@bernhard I am hiding some fields using the template context settings, but only the fields I mentioned aren't keeping those settings. All of the other fields keep their visibility settings.

RockSettings - Manage common site settings like a boss.

FireWire replied to bernhard's topic in Modules/Plugins

Hey @bernhard, everything is working great; I'm just having an issue with fields having their Visibility reset to 'Open' after refreshing modules. The only affected fields are Logo, Favicon, og:image, Images, and Files, so only the fields under the 'Media' fieldset. All of the other fields keep the visibility as configured in the template settings. I'm using version 2.0.1. Thanks!

@bernhard Your modules have so many features that I'm still getting to know them all, haha. Awesome, I'll keep that in mind as well. I think I'm going to need these features a lot more on the project I'm getting started on.