mtwebit

Everything posted by mtwebit

-

The global config is used to delete or export existing data. E.g.:

    pages:
      template: Family
    export:
      fields: [ family_id, previous_name, family_name, family_name_variants, source_text, bibliography_ref: title, notes ]
      delimiter: ';'
      header: 1

The Dataset > Purge link below the global config can be used to clean up the dataset (pages created during import).

Unfortunately, I did not use page save hooks in datasets during my projects. You may check the source code here.
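To make the export options above more concrete, here is a minimal sketch in plain PHP of what a field list, a ';' delimiter and a header row could produce. The exportRows() function and the sample row are hypothetical and only illustrate the idea; this is not DataSet's actual export code.

```php
<?php
// Hypothetical helper (not part of DataSet): turns rows into CSV text
// using a field list, a delimiter and an optional header row.
function exportRows(array $rows, array $fields, string $delimiter = ';', bool $header = true): string
{
    $out = fopen('php://memory', 'w+');
    if ($header) {
        // The header row is simply the list of exported field names.
        fputcsv($out, $fields, $delimiter, '"', '');
    }
    foreach ($rows as $row) {
        $line = [];
        foreach ($fields as $field) {
            // Missing fields are exported as empty values.
            $line[] = $row[$field] ?? '';
        }
        fputcsv($out, $line, $delimiter, '"', '');
    }
    rewind($out);
    $csv = stream_get_contents($out);
    fclose($out);
    return $csv;
}

// Made-up sample data, for illustration only.
echo exportRows(
    [['family_id' => 1, 'family_name' => 'Smith', 'notes' => 'n/a']],
    ['family_id', 'family_name', 'notes']
);
```

Fields that are listed but missing from a row end up as empty columns, so every exported line has the same number of columns as the header.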

-

I'm trying to store values in a FieldtypeTextUnique field using the API. Unfortunately, the following code reports no error or exception when a value is not stored because it is not unique in the DB.

    try {
      if (!$page->save($fieldname)) {
        ... report the error ...
      }
    } catch (\Exception $e) {
      ... report the exception ...
    }

I was expecting at least an exception because of the UNIQUE SQL constraint. Any idea how to catch this error in a module that modifies page fields? I also tried to validate the saved value, but things got complicated for certain field types (e.g. datetime, WireArrays). Thanks!

-

I guess you forgot to set the working directory in your crontab. You should invoke Tasker using cron this way:

    */2 * * * * docker exec --user nginx phpweb bash -c "cd /var/www/html/site/modules/Tasker && LD_PRELOAD=/usr/lib/preloadable_libiconv.so /usr/bin/php runByCron.php"

You can skip the docker and LD_PRELOAD parts but you need to set the working directory (cd ......./Tasker).

I don't really understand the other problem, sorry. Do you have trouble with the createTask() method called from your own module?

-

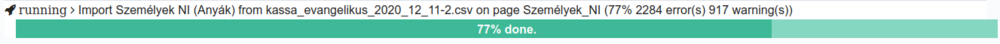

I forgot to mention it here, but it was fixed in November, last year. I completely rewrote this part of the code. There's no redirect anymore. The module will display an embedded progress bar if you run a task via the Web browser:

-

Actually, the log_messages field is created and used by Tasker, not DataSet. I don't see any easy solution to handle this problem. If PW core gets noindex support then I'll use that. The best thing you can do atm is to turn off profiling and debugging in Tasker on a production system (this is independent of the PW settings). It is also good practice to delete tasks after they are finished and create new ones when needed.

-

Although LazyCron and web-based task execution are supported, tasks should be invoked by command line tools (e.g. Unix cron), not from the Web server environment. See the wiki. Tasker uses process control functions for checking signals (e.g. an execution timer), which should be safe to use in a webserver environment, but the code also monitors the SIGINT and SIGTERM interrupts, and that part should be moved to the command line specific code. And it seems I need to check the availability of that function. Thanks for pointing this out.

-

I haven't used the DataSet XML import for a while. Let me know if it needs some polishing.

-

Thanks for reporting this. Probably a core compatibility issue. Fixed now. Pull the updated module from GitHub, or delete it from your site/modules and reinstall it.

-

Thanks for the feedback! I'm glad to hear that they are useful, although a bit complex to use. Tasker has a few small improvements; I think I pushed the latest version to the GitHub repo. DataSet changed a bit more, and some modified parts still need review and testing. Thanks for reminding me to finish them. My DataSet project is still running. We have 150k+ (mostly complex) data pages interconnected with many references, and we are starting to hit the wall with MySQL during imports and complex page reference lookups.

-

I use page references heavily in my projects. Page Autocomplete has a field (Settings specific to ...) on the Input tab of the field settings page that can be used to specify what fields are used during the query. You can even select multiple fields, e.g. a category_ref_by_id field can specify multiple ID fields. This way you can merge individual data sets into a single one. Each source set can have its own ID, and the ...ref_by_id field can use all of them. I have no plans for the automatic creation of the missing referenced page but it can be achieved very easily. Just create another DataSet using the same CSV file and import the appropriate "category" columns for creating the missing pages. You can also try to use the location attribute in the DataSet config to make a reference to the file uploaded to the original DataSet (see the wiki) to avoid duplicate uploads. If you need to perform these imports automatically you can create two tasks (category import and the original one) and specify a dependency between them (first import categories then the full data set). See Tasker wiki.

-

OK. It was time to update the wiki. I've uploaded a new DataSet version (0.9.5) to GitHub. It contains many improvements for data type conversions, page reference handling and several bug fixes. It also has a new profiler to optimize the import routines. Tasker is also updated.

-

I've uploaded a new version (0.9.5) to GitHub. It tries to handle DB connection loss errors and it has a basic profiler to optimize your import routines. See the wiki for more details. Note: TaskerAdmin needs some fixes in its JS-based task executor. Don't use this feature atm. (Cron is always the preferred task execution method.)

-

By default DataSet will create a new PW page each time it imports a row. In the above example, two pages will be created with title "Orange" and one with "Banana". There is no option to change the title for the new page (2nd Orange) if it matches an already existing one (1st Orange). You can, however, combine several fields in the title making it unique. E.g. you can create the title like this (column #0 always contains the row's serial number):

    title: [1, ' (', 0, ')']

The result will be:

    Orange (1)
    Orange (2)
    Banana (3)

You can also update (overwrite or merge) already existing pages. In the "pages" section of DS config you can specify a selector and add the overwrite or merge option. See the wiki for more details. (Which needs to be updated but it is probably still helpful.)
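The title composition rule above can be illustrated with a few lines of plain PHP. This is only a sketch of the idea, assuming that integers in the list are column indexes and everything else is literal text; composeTitle() is a hypothetical name and not DataSet's real implementation.

```php
<?php
// Hypothetical helper: builds a title from a composition spec and a row.
function composeTitle(array $spec, array $row): string
{
    $title = '';
    foreach ($spec as $part) {
        // Assumption: integers are column indexes, strings are literals.
        $title .= is_int($part) ? (string) ($row[$part] ?? '') : (string) $part;
    }
    return $title;
}

// Column 0 holds the row's serial number, column 1 the imported value.
$rows = [[1, 'Orange'], [2, 'Orange'], [3, 'Banana']];
foreach ($rows as $row) {
    echo composeTitle([1, ' (', 0, ')'], $row), "\n";
}
```

Because the serial number differs for every row, the composed titles stay unique even when the imported value repeats.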

-

I've checked the above config on my DataSet test site and it is valid. (Don't forget to save the page to run the validator again.)

-

Thanks for the hint. I've implemented the prefix-based workaround.

-

JSON rule format is now supported but I have a small problem with that. It works fine in the global rule field but storing JSON in file descriptions is not possible atm. Pagefile uses JSON internally for storing multi-language file descriptions so it is not possible to store JSON data there... I could not find a way to overcome this issue (even if multi-language descriptions are disabled Pagefile still drops JSON descriptions). Any idea? See Github issue

-

Thanks for the screencast, @flydev. I really appreciate it. Regarding pcntl on Windows... Unfortunately, I don't have experience in this field. Windows has no signals (as far as I know) so I'd use a virtualization tool to overcome this limitation. You can try an API/kernel virtualization (e.g. Windows Subsystem for Linux) or use Docker. Also thanks for the pull request. I'll check your suggestions.

-

I was thinking about this too... There was a dev branch that dropped the [file + rules in description] scheme and introduced a fieldset of [rule + (optional) file]. It turned out to be too complicated and it did not work well so I dropped it.

An easy solution is to allow source location override. So... see this commit and use the input:location configuration option. Not the best solution as it still requires a (dummy) file to be uploaded (to create the import rules in its description), but it works. You can even use this solution to refer to files uploaded to other pages using this URL scheme:

    wire://pageid/filename

Hope it helps.

That's different. It downloads data for a single field (e.g. a file to be stored in a filefield) not for an entire DataSet.
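For readers who want to see how a wire://pageid/filename location could be taken apart, here is a small sketch in plain PHP. It assumes that pageid is a numeric page ID; parseWireLocation() is a hypothetical name, and the way DataSet resolves such URLs internally may well differ.

```php
<?php
// Hypothetical helper: splits a wire://pageid/filename location into parts.
// Returns null if the string does not follow that scheme.
function parseWireLocation(string $location): ?array
{
    $parts = parse_url($location);
    if ($parts === false || ($parts['scheme'] ?? '') !== 'wire') {
        return null;
    }
    $pageId = $parts['host'] ?? '';
    $filename = ltrim($parts['path'] ?? '', '/');
    // Assumption: the page ID is numeric and a file name must be present.
    if (!preg_match('/^\d+$/', $pageId) || $filename === '') {
        return null;
    }
    return ['pageId' => (int) $pageId, 'filename' => $filename];
}

// 1234 and data.csv are made-up values.
var_dump(parseWireLocation('wire://1234/data.csv'));
```

Anything that is not a wire:// location yields null here, so a caller could fall back to treating the value as an ordinary URL or path.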

-

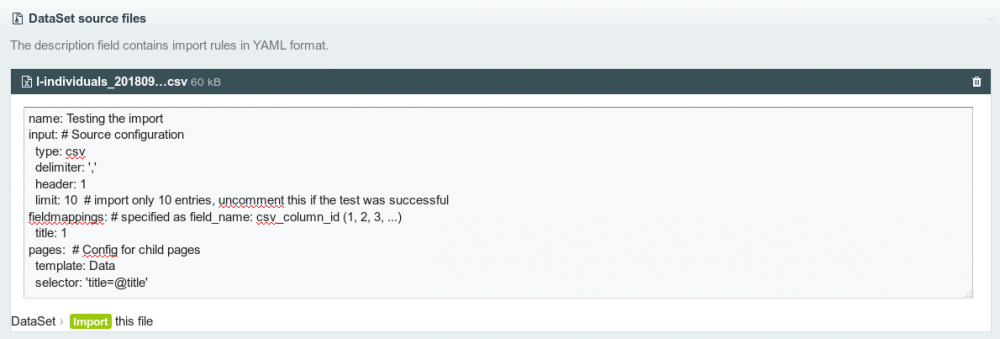

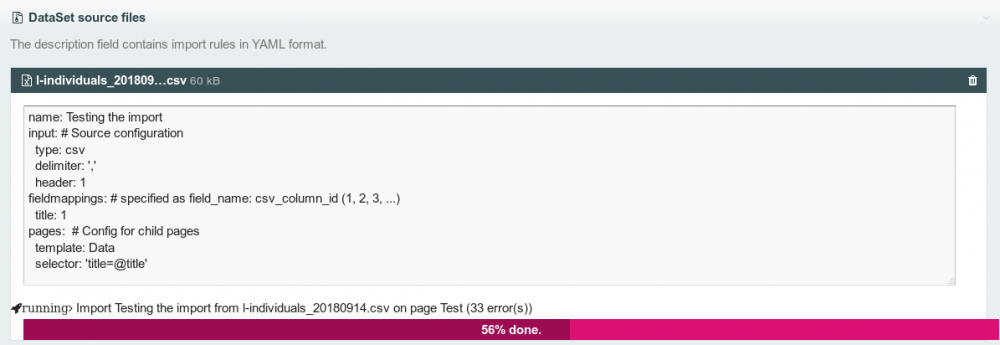

I've created a set of modules for importing (manipulating and displaying) data from external resources. A key requirement was to handle large (100k+) number of pages easily.

Main features

- import data from CSV and XML sources in the background (using Tasker)
- purge, update or overwrite existing pages using selectors
- user configurable input <-> field mappings
- on-the-fly data conversion and composition (e.g. joining CSV columns into a single field)
- download external resources (files, images) during import
- handle page references by any (even numeric) fields

How it works

You can upload CSV or XML files to DataSet pages and specify import rules in their description. The module imports the content of the file and creates/updates child pages automatically.

How to use it

Create a DataSet page that stores the source file. The file's description field specifies how the import should be done:

After saving the DataSet page an import button should appear below the file description.

When you start the import the DataSet module creates a task (executed by Tasker) that will import the data in the background. You can monitor its execution and check its logs for errors.

See the module's wiki for more details.

The module was already used in three projects to import and handle large XML and CSV datasets. It has some rough edges and I'm sure it needs improvement so comments are welcome.

-

Thanks for the feedback. I'll definitely have a look at InputfieldMarkup and wirequeue.

It is possible to integrate the UI elements into other pages. There's a renderTaskList() method to perform this:

    if(wire('modules')->isInstalled("TaskerAdmin")) {
      $out .= '<h3>Tasks</h3>';
      $out .= wire('modules')->get('TaskerAdmin')->renderTaskList($selector);
    }

It can render a task list or a detailed task monitoring div including JS code to show the progressbar or even to execute the task when displaying the page. It needs improvement, however, as its links point to the TaskerAdmin page atm and the list UI is not really customizable.

-

Tasker is a module to handle and execute long-running jobs in ProcessWire. It provides a simple API to create tasks (stored as PW pages), to set and query their state (Active, Waiting, Suspended etc.), and to execute them via Cron, LazyCron or HTTP calls.

Creating a task

    $task = wire('modules')->Tasker->createTask($class, $method, $page, 'Task title', $arguments);

where $class and $method specify the function that performs the job, $page is the task's parent page and $arguments provides optional configuration for the task.

Executing a task

You need to activate a task first

    wire('modules')->Tasker->activateTask($task);

then Tasker will automatically execute it using one of its schedulers: Unix cron, LazyCron or TaskerAdmin's REST API + JS client.

Getting the job done

Your method that performs the task looks like

    public function longTask($page, &$taskData, $params) { ... }

where $taskData is a persistent storage and $params are run-time options for the task.

Monitoring progress, management

The TaskerAdmin module provides a JavaScript-based front-end to list tasks, to change their state and to monitor their progress (using a jQuery progressbar and a debug log area). It also allows the on-line execution of tasks using periodic HTTP calls performed by JavaScript.

Monitoring task progress (and log messages if debug mode is active)

Task data and log

Detailed info (setup, task dependencies, time limits, REST API etc.) and examples can be found on GitHub.

This is my first public PW module. I'm sure it needs improvement.
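To show why a persistent $taskData passed by reference is useful, here is a self-contained sketch of a resumable worker in plain PHP that follows the longTask($page, &$taskData, $params) shape. The 'offset', 'done', 'items' and 'batch' keys and the boolean return value are assumptions made for this example; the post does not say how Tasker expects a method to report progress or completion, so check the GitHub docs for the real conventions.

```php
<?php
// Hypothetical worker: processes a few items per call and remembers
// its position in $taskData so the next call can continue from there.
function longTask($page, array &$taskData, array $params): bool
{
    $items = $params['items'];
    $batch = $params['batch'] ?? 2;      // assumed run-time option
    $offset = $taskData['offset'] ?? 0;  // assumed persistent state key

    foreach (array_slice($items, $offset, $batch) as $item) {
        // Stand-in for the real work (e.g. importing one record).
        $taskData['done'][] = strtoupper($item);
        $offset++;
    }

    $taskData['offset'] = $offset;
    // Assumption: true means "finished", false means "call me again".
    return $offset >= count($items);
}

// Simulate a scheduler that calls the worker until it is finished.
$taskData = [];
$params = ['items' => ['a', 'b', 'c'], 'batch' => 2];
$calls = 0;
do {
    $calls++;
    $finished = longTask(null, $taskData, $params);
} while (!$finished);
```

Each call does a bounded amount of work, which is what makes it possible to run a long job in several short slices from cron or from periodic HTTP calls.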

-

I was looking for a module that allows the execution of long-running tasks (working with tens of thousands of pages) and could not find a suitable solution.

It started with the import problem. I have lots of data in XML form (20k+ complex entries) that I want to import into ProcessWire. XMLReader() works fine but it takes a very long time to import all the data, so a simple "upload + process data on page save" would not work.

So I've created a new module for this. Meet my first (well, third) PW module: Tasker. It's a simple module that executes long tasks in the background (using Cron or LazyCron) and reports their status to the user using a jQuery progressbar.

Any suggestions are welcome. How do you solve similar problems? E.g. which is the best way to delete a large number of pages? (max_exec_time will expire so I check it before the delete() call.)

    $children = $page->children('template='.$this->template.',include=all'); // creates lot of page objects, may not have time to delete all
    + ...->delete()

OR

    $childIDs = $this->pages->findIDs('parent='.$page->id.',template='.$this->template.',include=all'); // will create page objects later
    + $pages->get(..id..)->delete()

Be nice (I know you always are), I'm a PW newbie and have just started working with ProcessWire. I was developing sites with Drupal for a very long time but their non-existent module upgrade path has finally driven me away from it.
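The "check the time before each delete() call" idea can be sketched independently of ProcessWire. The example below is a minimal illustration in plain PHP: processWithinBudget() is a hypothetical helper, and the delete operation is passed in as a callable so the snippet stays self-contained.

```php
<?php
// Hypothetical helper: handles IDs one by one until a time budget is
// used up and returns the IDs that are still waiting to be processed.
function processWithinBudget(array $ids, callable $deleteOne, float $budgetSeconds): array
{
    $ids = array_values($ids);
    $start = microtime(true);
    foreach ($ids as $index => $id) {
        // Check the clock before each operation, not after it.
        if (microtime(true) - $start >= $budgetSeconds) {
            return array_slice($ids, $index);
        }
        $deleteOne($id);
    }
    return [];
}

// Example with a stand-in "delete" that only records the ID.
$deleted = [];
$remaining = processWithinBudget(
    [101, 102, 103],
    function (int $id) use (&$deleted): void { $deleted[] = $id; },
    5.0
);
```

With the findIDs() variant from the post, the callable could wrap the $pages->get(..id..)->delete() call, so only one page object exists at a time and the returned list of remaining IDs can be picked up by the next run.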

-

I face a similar problem, 20k+ entries from external sources. XMLReader() is quite fast and has low memory usage. It's also pretty simple if you understand its logic.

    $xml = new \XMLReader();
    $xml->open($file->filename);
    while($xml->next('tagName')) {
      if ($xml->nodeType != \XMLReader::ELEMENT) continue; // skip the end element
      ... process attributes ... $xml->getAttribute('attr')
      ... inner or outer XML ... $xml->readOuterXML()
      ... you can even convert it to SimpleXML or DOM
    }
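Here is a runnable variant of the loop above, as a sketch only. It reads from a string instead of a file and uses made-up element and attribute names (entries, entry, id, name). It also first reads forward to the first matching element and only then jumps between siblings with next(), which is a common way to use XMLReader and not necessarily how the original import code is structured.

```php
<?php
// Hypothetical helper: collects the id attribute and <name> child of
// every <$tag> element while streaming through the XML text.
function readEntries(string $xmlText, string $tag): array
{
    $reader = new XMLReader();
    $reader->XML($xmlText);

    // Move forward until the first matching start element is reached.
    $found = false;
    while ($reader->read()) {
        if ($reader->nodeType === XMLReader::ELEMENT && $reader->name === $tag) {
            $found = true;
            break;
        }
    }

    $rows = [];
    while ($found) {
        $id = $reader->getAttribute('id');
        // Convert only the current element to SimpleXML, not the whole file.
        $element = new SimpleXMLElement($reader->readOuterXml());
        $rows[] = ['id' => $id, 'name' => (string) $element->name];
        // Skip the current subtree and jump to the next matching sibling.
        $found = $reader->next($tag);
    }

    $reader->close();
    return $rows;
}

$sample = '<entries>'
    . '<entry id="1"><name>Alpha</name></entry>'
    . '<entry id="2"><name>Beta</name></entry>'
    . '</entries>';
print_r(readEntries($sample, 'entry'));
```

Only one entry is expanded into a SimpleXML object at a time, which is what keeps memory usage low even for very large source files.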