djr

Members
Posts: 12

About djr

- Birthday: 08/06/1996
- Gender: Male
- Location: Scotland

djr's Achievements

- Jr. Member (3/6)
- Reputation: 28

-

Oh. That tells me it's using the native mysqldump (not the PHP implementation), but it's still failing. Perhaps the file permissions don't allow creating a new file (data.sql) in the root of your site? I should probably add a check for that.
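A check like that could look something like the following. It is a Python sketch for illustration only (the module itself is PHP), and the name `can_create_file` is hypothetical. Rather than trusting permission bits alone, it tries to create and delete a throwaway file in the target directory, which also catches cases that permission bits don't reveal.

```python
import os
import tempfile


def can_create_file(directory: str) -> bool:
    """Return True if a new file (e.g. data.sql) can be created in `directory`.

    Hypothetical pre-flight check: actually create a temporary file in the
    directory and remove it again, instead of only inspecting mode bits.
    """
    if not os.path.isdir(directory):
        return False
    try:
        # The file is created immediately and deleted when the block exits.
        with tempfile.NamedTemporaryFile(dir=directory, delete=True):
            pass
    except OSError:  # includes PermissionError
        return False
    return True
```

Such a check could run both at install time and at the start of each backup, so a permissions problem produces a clear message instead of a failed dump.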

-

@tyssen, @jacmaes: I've released 0.0.2, which has a pure-PHP fallback for mysqldump and tar. Give it a go.
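A pure-language dump fallback boils down to reading every row and writing it back out as SQL statements. The sketch below shows the idea in Python, using the standard library's sqlite3 as a stand-in database so it runs anywhere; it is not the module's PHP code, and the helper names `sql_literal` and `dump_table` are hypothetical. A real MySQL fallback would also need to emit CREATE TABLE statements and handle MySQL's backslash escaping, and fetching row by row like this is one reason such fallbacks are slower than native mysqldump.

```python
import sqlite3
from typing import Iterator


def sql_literal(value: object) -> str:
    """Render a Python value as a generic SQL literal."""
    if value is None:
        return "NULL"
    if isinstance(value, bool):
        return "1" if value else "0"
    if isinstance(value, (int, float)):
        return repr(value)
    if isinstance(value, bytes):
        return "X'" + value.hex() + "'"
    # Standard SQL escapes a single quote by doubling it. (MySQL also treats
    # backslashes specially by default, which a real fallback must handle.)
    return "'" + str(value).replace("'", "''") + "'"


def dump_table(conn: sqlite3.Connection, table: str) -> Iterator[str]:
    """Yield one INSERT statement per row of `table`."""
    # Identifiers can't be bound as parameters, so quote the table name.
    quoted = '"' + table.replace('"', '""') + '"'
    cursor = conn.execute(f"SELECT * FROM {quoted}")
    columns = ", ".join(d[0] for d in cursor.description)
    for row in cursor:
        values = ", ".join(sql_literal(v) for v in row)
        yield f"INSERT INTO {quoted} ({columns}) VALUES ({values});"
```

Because `dump_table` is a generator, the statements can be streamed straight to data.sql without holding the whole table in memory.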

-

djr started following Module: ScheduleCloudBackups (back up site to S3)

-

@tyssen, @jacmaes: Most likely the server doesn't have the mysqldump utility available. It's possible to add a pure-PHP fallback (Pete's ScheduleBackups has one), but it will probably be considerably slower than the real mysqldump. I'll see about adding it soon, but I'm a bit busy today.
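Deciding between the native tool and a fallback can start with looking the binary up on the PATH. Here is a Python sketch of that decision (the module itself is PHP, and `choose_dump_strategy` is a hypothetical name). Finding the binary isn't quite enough, since the web server user may still be unable to execute it, so the sketch also runs it once with `--version`.

```python
import shutil
import subprocess
from typing import Optional, Sequence


def find_tool(name: str) -> Optional[str]:
    """Return the full path of an executable on PATH, or None."""
    return shutil.which(name)


def tool_runs(path: str, args: Sequence[str] = ("--version",)) -> bool:
    """Check that the tool can actually be executed, not just found."""
    try:
        result = subprocess.run([path, *args], capture_output=True, timeout=10)
    except (OSError, subprocess.TimeoutExpired):
        return False
    return result.returncode == 0


def choose_dump_strategy() -> str:
    """Prefer native mysqldump; otherwise use the slower pure fallback."""
    path = find_tool("mysqldump")
    if path is not None and tool_runs(path):
        return "native"
    return "fallback"
```

The same pair of checks applies to tar.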

-

Now available on the module directory: http://modules.processwire.com/modules/schedule-cloud-backups

-

At the moment it doesn't have any knowledge of Glacier, but you should be able to use S3's Object Lifecycle Management system to automatically transfer backups from S3 to Glacier.
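For reference, an S3 lifecycle rule that moves backups to Glacier looks roughly like the configuration built below. This is a sketch: the `backups/` prefix, the rule ID and the 30-day threshold are assumptions, not something the module sets up. The dictionary follows the shape that boto3's `put_bucket_lifecycle_configuration` expects for its `LifecycleConfiguration` argument. The same rule can also be created by hand in the S3 console.

```python
def glacier_lifecycle_config(prefix: str = "backups/", days: int = 30) -> dict:
    """Build an S3 lifecycle configuration that transitions objects under
    `prefix` to the GLACIER storage class after `days` days."""
    return {
        "Rules": [
            {
                "ID": "backups-to-glacier",  # hypothetical rule name
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [{"Days": days, "StorageClass": "GLACIER"}],
            }
        ]
    }


# Applying it with boto3 (not run here; the bucket name is a placeholder):
#   import boto3
#   boto3.client("s3").put_bucket_lifecycle_configuration(
#       Bucket="my-backup-bucket",
#       LifecycleConfiguration=glacier_lifecycle_config(),
#   )
```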

-

Thanks Ryan. I've made some changes to the code in response to your suggestions:

- The data.sql file is already protected from web access by the default PW .htaccess file, and I've added a .htaccess file in the module directory to prevent access to the backup tarball.
- I've changed the shouldBackup check to be more specific (it behaves the same as your suggestion, but with simpler logic).
- I don't know what the issues around conditional autoloading in PW 2.4 are, so I'll leave that for now (?).
- I'll put IP whitelisting on the todo list, but I don't think it's essential right now, since it's unlikely anybody would be able to guess the secret token in the URL.
- The `wget` command for the cron job is displayed on the module config page (prefilled with the URL). Would it be better to have the cron job run a PHP file directly rather than going through the web server? Not sure.
- I've added a short mention of the requirements to the readme.
- I've also adjusted the install method to check that it can run tar and mysqldump.

I'll submit it to the module directory shortly.
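The secret-token approach can be sketched as follows, in Python for illustration only. The query parameter name `token`, the example URL and the 03:00 schedule are hypothetical placeholders, not the module's actual values. The token comes from a cryptographically secure generator, is compared in constant time, and the cron line simply requests the URL with `wget`.

```python
import hmac
import secrets
from urllib.parse import urlencode


def new_token() -> str:
    """Generate an unguessable URL-safe token (32 random bytes)."""
    return secrets.token_urlsafe(32)


def token_is_valid(supplied: str, expected: str) -> bool:
    """Compare tokens in constant time to avoid timing side channels."""
    return hmac.compare_digest(supplied.encode(), expected.encode())


def cron_line(backup_url: str, token: str, schedule: str = "0 3 * * *") -> str:
    """Build a crontab entry that requests the backup URL once a day."""
    url = f"{backup_url}?{urlencode({'token': token})}"
    # -q: no output; -O /dev/null: discard the response body.
    return f"{schedule} wget -q -O /dev/null '{url}'"
```

With 32 random bytes, guessing the token is not a realistic attack, which supports treating IP whitelisting as a nice-to-have rather than essential.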

-

Hello, I've written a little module that backs up your ProcessWire site to Amazon S3 (I might add support for other storage providers later, hence the generic name). Pete's ScheduleBackups was used as a starting point, but it has been overhauled somewhat. It's still far from perfect, but I guess you might find it useful.

Essentially, you set up a cron job to load a page every day; the script then creates a .tar.gz containing all of the site files and a dump of the database, and uploads it to an S3 bucket. Currently, only Linux-based hosts are supported (hopefully that covers most of you).

The module is available on GitHub: https://github.com/DavidJRobertson/ProcessWire-ScheduleCloudBackups
Zip download: https://github.com/DavidJRobertson/ProcessWire-ScheduleCloudBackups/archive/master.zip

Let me know what you think.

EDIT: now available on the module directory @ http://modules.processwire.com/modules/schedule-cloud-backups
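The archive step described above (site files plus a database dump in one .tar.gz) can be sketched in Python with the standard `tarfile` module. The function name and the archive naming scheme are hypothetical, and the dump is assumed to already exist on disk. The module itself shells out to tar, with a pure-PHP fallback. The upload is left out; with boto3 it would be a call such as `upload_file(archive, bucket, key)` on an S3 client.

```python
import os
import tarfile
from datetime import datetime, timezone


def create_backup_archive(site_root: str, dump_file: str, out_dir: str) -> str:
    """Create a timestamped .tar.gz holding the site files and the DB dump.

    Returns the archive path. `out_dir` should be outside `site_root`,
    otherwise the archive would try to include itself.
    """
    stamp = datetime.now(timezone.utc).strftime("%Y%m%d-%H%M%S")
    archive = os.path.join(out_dir, f"backup-{stamp}.tar.gz")
    with tarfile.open(archive, "w:gz") as tar:
        # Store the site under a fixed top-level directory name.
        tar.add(site_root, arcname="site")
        tar.add(dump_file, arcname="data.sql")
    return archive
```

A UTC timestamp in the name keeps daily backups from overwriting each other in the bucket and makes them sort chronologically.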

- 20 replies

-

"Continuous integration" of Field and Template changes

djr replied to mindplay.dk's topic in General Support

I'm really not sure what the issue is with writable files with regard to security. If the server is compromised in a way that would allow an attacker to modify these files, then you're already screwed, as they could modify the code as well. In that case they would also be able to access the database. The only issue I can see is files having incorrect permission modes, which could easily be detected and warned about in the admin interface. (Additionally, if I know that I will never want to make any metadata changes directly on the production site, I could set a configuration option (or something) to disable the editing interface for fields/templates, and then also make the files non-writable. Obviously this scenario won't apply to everyone.)

We already have an issue of inconsistency: the template PHP code may not be consistent with the data structure. If the template/field config is stored in files, this issue disappears. With this model, if the metadata somehow becomes out of sync with the data, the only real issue is that some data might not be present (which would have been an issue anyway). In fact, it's arguable that it can't really become out of sync at all, since the schema files are the authoritative source.

- If a field has been added, everything just works, apart from the fact that old pages won't have data for the new field.
- If a field is removed, that's not a problem; it's just not used any more. The data would still be there but orphaned (see the bottom of this post re: the cleanup task).
- If a field's type is changed, the old data is again left in the old fieldtype table, and everything should just work.

The whole idea was to avoid needing to change the database schema at all. Changing the field/template configuration to something incorrect could break the site until it's corrected, but would not cause any data loss.
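Detecting incorrect permission modes could be as simple as the following Python sketch, the kind of check an admin page might run before showing a warning. The idea of a dedicated directory of schema files and the name `world_writable_files` are assumptions for illustration.

```python
import os
import stat
from typing import List


def world_writable_files(directory: str) -> List[str]:
    """Return paths under `directory` whose mode allows writes by 'other'."""
    flagged = []
    for dirpath, _dirnames, filenames in os.walk(directory):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if os.stat(path).st_mode & stat.S_IWOTH:
                flagged.append(path)
    return sorted(flagged)
```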
Since the files on disk would represent the desired state of the data, not the changes required to achieve that state, destructive actions would not be performed automatically. I imagine a cleanup task would be necessary to remove old data which is no longer required (e.g. when a field is removed). This would be run manually from the admin interface, so user confirmation would still be required.
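Because the files describe the desired state, a cleanup task like this mostly comes down to a set difference between the fields the schema files declare and the fields that still have data in the database. Here is a Python sketch. The data shapes (a set of declared field names, and a mapping from field name to stored row count) and the function names are hypothetical, and the dictionary stands in for the real database.

```python
from typing import Dict, List, Set


def orphaned_fields(declared: Set[str], stored: Dict[str, int]) -> List[str]:
    """Fields that still have data but are no longer declared in any file."""
    return sorted(name for name, rows in stored.items()
                  if name not in declared and rows > 0)


def cleanup(declared: Set[str], stored: Dict[str, int], confirmed: bool) -> List[str]:
    """Return the fields whose data would be (or was) deleted.

    Without explicit confirmation this is only a dry-run report; nothing
    destructive happens automatically.
    """
    to_delete = orphaned_fields(declared, stored)
    if confirmed:
        for name in to_delete:
            stored.pop(name)  # stand-in for deleting the rows from the DB
    return to_delete
```

Running it unconfirmed first gives the admin page the list to show in the confirmation prompt.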

"Continuous integration" of Field and Template changes

djr replied to mindplay.dk's topic in General Support

Okay, so what happens in this scenario?

1. I take a copy of the production site (including the database) and make some changes on my local machine.
2. At the same time, my client logs in and edits something, or the production database is otherwise altered.
3. I dump my database and upload it, along with the files, to the production server.

I have just overwritten my client's changes. If you can ensure that they will not make any changes between you taking a copy and uploading the changed database, it works fine. Unfortunately, I cannot make this guarantee.

Additionally, since the metadata is in the database rather than in files, it will not be tracked by your version control system (assuming you're using one). This also makes collaborating with other developers much harder than it needs to be.
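One way to at least detect the lost-update scenario above (it doesn't solve the underlying problem) is an optimistic check. You fingerprint the production data when you take the copy, then refuse to upload if the fingerprint has changed since. This is a Python sketch with hypothetical names, and hashing every row with SHA-256 is an assumption; a real site might use something cheaper.

```python
import hashlib
from typing import Dict, Iterable, Tuple

Row = Tuple[object, ...]


def fingerprint(tables: Dict[str, Iterable[Row]]) -> str:
    """Hash all table contents in a stable order (table names sorted,
    rows sorted by repr), so row order doesn't affect the result."""
    h = hashlib.sha256()
    for name in sorted(tables):
        h.update(name.encode() + b"\0")
        for row in sorted(tables[name], key=repr):
            h.update(repr(row).encode() + b"\0")
    return h.hexdigest()


def safe_to_deploy(snapshot_fp: str, current_tables: Dict[str, Iterable[Row]]) -> bool:
    """True only if production hasn't changed since the snapshot was taken."""
    return fingerprint(current_tables) == snapshot_fp
```

Even when the check passes, the window between the check and the upload remains, which is why keeping metadata out of the database is the more robust fix.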

"Continuous integration" of Field and Template changes

djr replied to mindplay.dk's topic in General Support

@teppo: Ah, of course, selectors could be a problem. I'm not familiar enough with PW to know how this could work properly. And you're right, reusable fields are convenient. So I guess we could keep global fields as they are in the current codebase. That would also minimize potential issues in migrating existing code, which assumes that fields are global rather than local to each template.

However, the fields could still be stored in the filesystem rather than in the database. That doesn't need to get in the way of the GUI tools in the admin interface for administering fields/templates. They could function as they do now, just storing the metadata in files instead of the database. There doesn't really have to be any user interface change at all, so the experience of rapidly building data structures can stay, just with some extra benefits: easier version control, collaboration, and deployment.

I'm not sure how it would perform compared to the current system. I wouldn't expect any noticeable difference for small sites, but for very large datasets, I don't know. It might be worth testing.
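Storing the metadata in files doesn't have to change how the admin tools behave; the save handler just writes a file instead of a row. This is a Python sketch of that idea, where the one-JSON-file-per-field layout and the function names are assumptions. Writing to a temporary file and renaming it means a half-written file is never read, and sorted keys keep the output stable so version-control diffs stay small.

```python
import json
import os
import tempfile


def save_field(directory: str, name: str, settings: dict) -> str:
    """Write a field's settings to <directory>/<name>.json atomically."""
    os.makedirs(directory, exist_ok=True)
    path = os.path.join(directory, f"{name}.json")
    fd, tmp = tempfile.mkstemp(dir=directory, suffix=".tmp")
    try:
        with os.fdopen(fd, "w", encoding="utf-8") as f:
            # Sorted keys and fixed indentation give diff-friendly output.
            json.dump(settings, f, indent=2, sort_keys=True)
            f.write("\n")
        os.replace(tmp, path)  # atomic rename over any previous version
    except BaseException:
        os.unlink(tmp)
        raise
    return path


def load_field(directory: str, name: str) -> dict:
    """Read a field's settings back from its JSON file."""
    with open(os.path.join(directory, f"{name}.json"), encoding="utf-8") as f:
        return json.load(f)
```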

"Continuous integration" of Field and Template changes

djr replied to mindplay.dk's topic in General Support

It's definitely possible, but I'm not convinced it's the best way to do it. I was talking about changing the PW model. Your system essentially functions like a database (schema) migration tool. What I'm suggesting is avoiding the need to change the database schema at all.

I'm imagining that we could create two files for each template: one is the actual template PHP code (as normal), and the other is a file containing the template and field settings that PW currently stores in the database. A very rough example using JSON as the metadata format: https://gist.github.com/DavidJRobertson/2db2dc82107d2faacb0b (It's quite crude and messy; this is essentially just a dump of all the relevant data from the default-site. Presumably some defaults could be assumed, so not everything in that gist would need to be specified. Or perhaps another PHP file (with a custom extension) could be used to configure the template programmatically.)

If fields don't need their own tables but instead use a table dedicated to their fieldtype, we do not need to make any modifications to the database for this to work.
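The idea that "some defaults could be assumed" is easy to sketch. A per-template metadata file lists only what differs from a set of defaults, and loading merges the two. This is Python for illustration, and the setting names (`fields`, `allow_children`, `cache_seconds`) and their default values are hypothetical, not PW's actual template settings.

```python
import copy
import json
from typing import Any, Dict

# Hypothetical defaults; a real implementation would mirror PW's own settings.
TEMPLATE_DEFAULTS: Dict[str, Any] = {
    "fields": [],
    "allow_children": True,
    "cache_seconds": 0,
}


def load_template_meta(text: str) -> Dict[str, Any]:
    """Parse a template metadata file and fill in unspecified settings."""
    meta = json.loads(text)
    if not isinstance(meta, dict):
        raise ValueError("template metadata must be a JSON object")
    unknown = set(meta) - set(TEMPLATE_DEFAULTS)
    if unknown:
        raise ValueError(f"unknown settings: {sorted(unknown)}")
    # Deep-copy the defaults so callers can't mutate the shared lists;
    # explicitly specified settings override the defaults.
    return {**copy.deepcopy(TEMPLATE_DEFAULTS), **meta}
```

Rejecting unknown keys catches typos in hand-edited files, which matters more once the files, not the database, are the authoritative source.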

"Continuous integration" of Field and Template changes

djr replied to mindplay.dk's topic in General Support

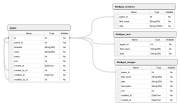

I've been thinking about this issue lately also. I want the template/field metadata to be stored in files, not the database, since the metadata is closer to code than data. I think that using some sort of system to replay changes is a bit of a cop-out. I'd much prefer if no database changes were necessary. This means: 1. Template metadata has to be moved out from the templates table to files on disk. Easy enough. 2. Field metadata has to be moved out of the database. This is a bit trickier than the template metadata. The data in the fields table could easily go in a file, but the issue is the field_<name> tables that are created for each field. Obviously, you can't just use a single generic table as it won't have the correct columns etc. My first thought was that using a schemaless/"NoSQL" document database (e.g. mongo) would be a great solution to this problem. Just have a single pages collection, and store the field data as embedded documents. The field metadata can be moved out to a file, and since the database is schemaless, there is no issue with having the wrong database schema for the fields. I have been playing with this idea over the past few days. It has a number of issues- it would be a lot of work to change the whole codebase to use a schemaless database; such database systems aren't as readily available as MySQL is; ... So, this morning it struck me that there was an easier way to accomplish the desired effect (without the need to change to a schemaless database system). The issue with putting all of the field metadata in files is that the field_<name> tables still need to be created with the correct schema. But actually, do we really need these field_<name> tables? I don't think so. Instead we can have a table for each fieldtype (created at install) which stores all fields of that type. The rows of these tables are linked to the row in the pages table. 
This also means that fields do not have to be a global construct, but can be specific to each template (e.g. two templates could have fields with the same name but different type or other configuration difference). I feel that this is a more natural way to model the data. I guess the schema would look a bit like this: Any thoughts?
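The proposed schema was posted as an image, so the following is only a rough guess at the idea in runnable form. There is one table per fieldtype, each row is keyed by page id and field name, and the mapping from template to field to fieldtype comes from metadata files rather than from the database. It uses Python's sqlite3 so it runs anywhere, and every table, column and template name in it is hypothetical. Note that two templates each have a `price` field of a different type without any schema change.

```python
import sqlite3
from typing import Any, Optional

SCHEMA = """
CREATE TABLE pages (
    id       INTEGER PRIMARY KEY,
    template TEXT NOT NULL
);
-- One table per fieldtype, created once at install time.
CREATE TABLE fieldtype_text (
    page_id INTEGER NOT NULL REFERENCES pages(id),
    field   TEXT    NOT NULL,
    data    TEXT,
    PRIMARY KEY (page_id, field)
);
CREATE TABLE fieldtype_integer (
    page_id INTEGER NOT NULL REFERENCES pages(id),
    field   TEXT    NOT NULL,
    data    INTEGER,
    PRIMARY KEY (page_id, field)
);
"""

# Metadata that would live in files: template -> field -> fieldtype table.
TEMPLATE_FIELDS = {
    "product": {"title": "fieldtype_text", "price": "fieldtype_integer"},
    "quote": {"title": "fieldtype_text", "price": "fieldtype_text"},
}


def _table_for(conn: sqlite3.Connection, page_id: int, field: str) -> str:
    """Look up the page's template, then the fieldtype table for the field."""
    row = conn.execute("SELECT template FROM pages WHERE id = ?", (page_id,)).fetchone()
    if row is None:
        raise KeyError(f"no page with id {page_id}")
    # Table names come from trusted metadata files, never from user input.
    return TEMPLATE_FIELDS[row[0]][field]


def set_value(conn: sqlite3.Connection, page_id: int, field: str, value: Any) -> None:
    table = _table_for(conn, page_id, field)
    conn.execute(
        f"INSERT OR REPLACE INTO {table} (page_id, field, data) VALUES (?, ?, ?)",
        (page_id, field, value),
    )


def get_value(conn: sqlite3.Connection, page_id: int, field: str) -> Optional[Any]:
    table = _table_for(conn, page_id, field)
    row = conn.execute(
        f"SELECT data FROM {table} WHERE page_id = ? AND field = ?",
        (page_id, field),
    ).fetchone()
    return None if row is None else row[0]
```

Adding a field here means editing `TEMPLATE_FIELDS` only. Removing one leaves its rows orphaned in the fieldtype table until a manual cleanup, which matches the behaviour described for this model.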